Deploy and Manage Azure Infrastructure Using Terraform, Remote State, and Azure DevOps Pipelines (YAML)

Overview

In this article, I will be showing you how to create an Azure DevOps CI/CD (continuous integration / continuous deployment) Pipeline that will deploy and manage an Azure environment using Terraform. Terraform is a tool for building, changing, and versioning infrastructure safely and efficiently.

Configuration files (in our case, ‘main.tf’) describe to Terraform how you want your environment constructed. “Terraform generates an execution plan describing what it will do to reach the desired state, and then executes it to build the described infrastructure. As the configuration changes, Terraform can determine what changed and create incremental execution plans which can be applied.” [1]

By creating an entire CI/CD pipeline, we can automate our infrastructure-as-code (IaC) deployment. When we change our Terraform code (adding, removing, or modifying resources), the Pipeline will automatically log in to our Azure environment, create any new resources we specified, delete any resources we removed, and update any existing resources that changed.
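To make the create/update/delete idea concrete, below is a toy Python sketch (Python is used only for illustration; it is not part of this project) of how a desired configuration can be compared with what was recorded last time to produce an incremental plan. It is a conceptual model under simplifying assumptions, not how Terraform is actually implemented, and the resource addresses and attributes are hypothetical.

```python
from typing import Dict, List, Tuple

# Each resource is keyed by its address (for example "azurerm_subnet.networksubnet")
# and described by a flat dictionary of attribute values.
Resources = Dict[str, Dict[str, str]]


def plan(desired: Resources, current: Resources) -> List[Tuple[str, str]]:
    """Return the (action, address) pairs needed to turn `current` into `desired`."""
    actions: List[Tuple[str, str]] = []
    for address in sorted(desired):
        if address not in current:
            actions.append(("create", address))  # declared but not yet deployed
        elif desired[address] != current[address]:
            actions.append(("update", address))  # deployed but differs from the code
    for address in sorted(current):
        if address not in desired:
            actions.append(("delete", address))  # deployed but removed from the code
    return actions


if __name__ == "__main__":
    current = {
        "azurerm_resource_group.resourcegroup": {"location": "northcentralus"},
        "azurerm_network_security_rule.nsgrule": {"access": "Allow"},
        "azurerm_public_ip.pip": {"allocation_method": "Static"},
    }
    desired = {
        "azurerm_resource_group.resourcegroup": {"location": "northcentralus"},
        "azurerm_network_security_rule.nsgrule": {"access": "Deny"},
        "azurerm_network_interface.nic": {"private_ip_address_allocation": "Dynamic"},
    }
    for action, address in plan(desired, current):
        print(action, address)
```

The example run prints one create, one update and one delete. Real Terraform plans also have a "replace" action, used when a changed argument cannot be updated in place.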

Environment

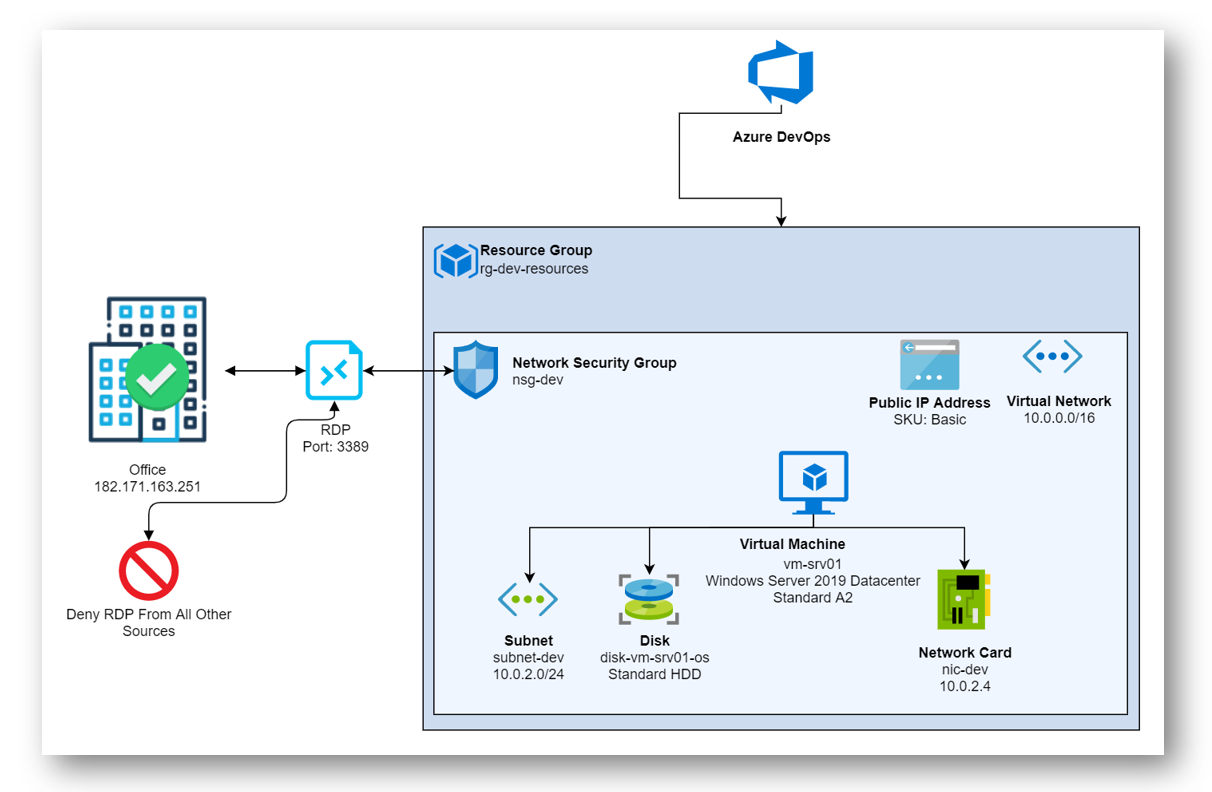

In this article, I will supply you with two Terraform configuration files, “main.tf” and “variables.tf.” The diagram above shows the environment, and below is a list of what we will have created by the end of the article.

- Resource Group

- Virtual Network

- Subnet

- Network Security Group

- Network Security Rule to allow RDP from my main office

- Network Security Rule to disallow RDP from anywhere else

- Public IP Address

- Network Interface Card (NIC)

- Server 2019 Datacenter Virtual Machine

- OS Disk for VM

State File

State Management is essential in Terraform. Think of your state file as a database for your Terraform project. The resources you describe in your configuration file are linked to actual resources (in our case, Azure resources). Remote state (storing your state file in a central location) gives you easier version control, safer storage, and allows multiple team members to access and work with it.

In our case, the Terraform state file will be stored in an Azure Storage container that we can easily share with other IT team members. Terraform reads the remote state file on every run, so as long as we leave it where we configured it, Terraform will do the rest.
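As a rough illustration of that database idea, the Python sketch below reads an abbreviated, hypothetical state document shaped like Terraform's JSON state format (version 4) and maps each resource address from the configuration to the Azure resource ID recorded for it. Treat it as a read-only illustration: in practice, `terraform state list` and `terraform show -json` are the supported ways to inspect state, and a state file should never be edited by hand.

```python
import json
from typing import Dict

# Abbreviated, hypothetical state document modeled on Terraform's JSON state
# (format version 4). Real state files contain many more fields.
SAMPLE_STATE = """
{
  "version": 4,
  "resources": [
    {
      "mode": "managed",
      "type": "azurerm_resource_group",
      "name": "resourcegroup",
      "instances": [
        {"attributes": {"id": "/subscriptions/<subscription-id>/resourceGroups/rg-dev-resources"}}
      ]
    },
    {
      "mode": "managed",
      "type": "azurerm_public_ip",
      "name": "pip",
      "instances": [
        {"attributes": {"id": "/subscriptions/<subscription-id>/resourceGroups/rg-dev-resources/providers/Microsoft.Network/publicIPAddresses/pip-dev"}}
      ]
    }
  ]
}
"""


def managed_resource_ids(state_json: str) -> Dict[str, str]:
    """Map each managed resource address (type.name) to the Azure ID stored in state."""
    state = json.loads(state_json)
    ids: Dict[str, str] = {}
    for resource in state.get("resources", []):
        if resource.get("mode") != "managed":
            continue  # data sources are read, not managed
        address = f"{resource['type']}.{resource['name']}"
        # The resources in this article use neither count nor for_each,
        # so each one has exactly one instance.
        for instance in resource.get("instances", []):
            ids[address] = instance["attributes"]["id"]
    return ids


if __name__ == "__main__":
    for address, resource_id in managed_resource_ids(SAMPLE_STATE).items():
        print(address, "->", resource_id)
```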

If you want to learn more about Terraform State, check out this article.

Pipeline

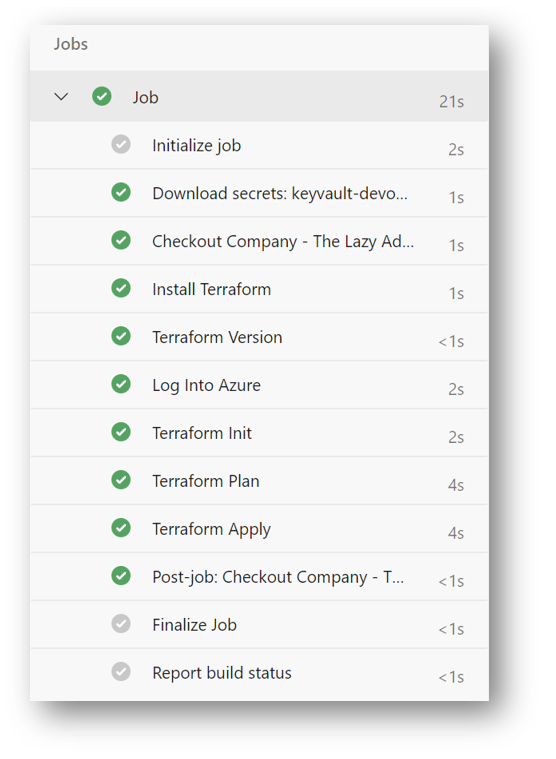

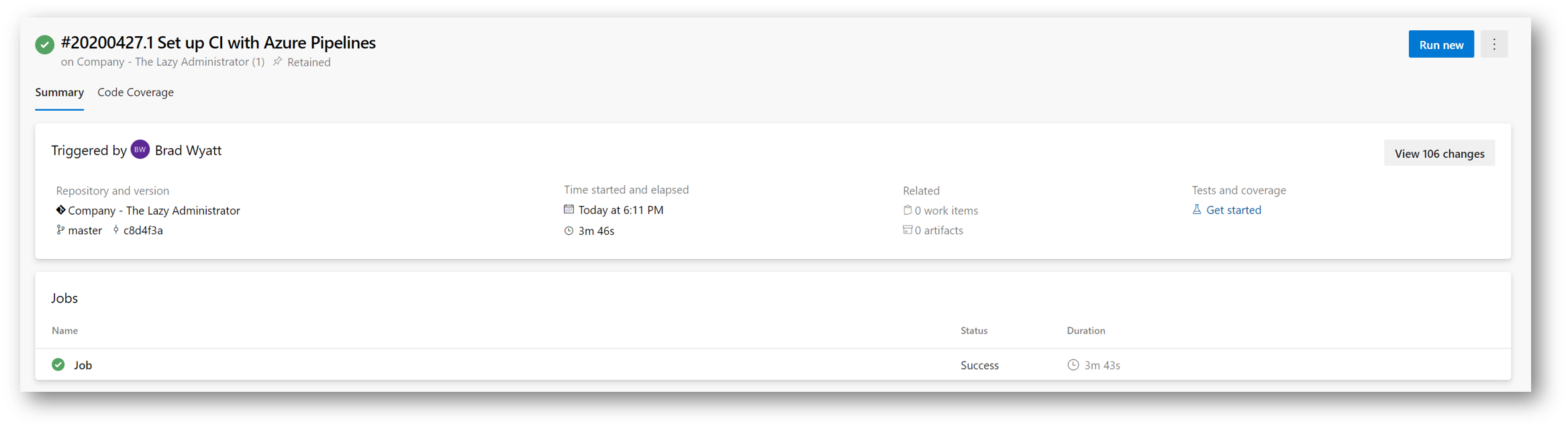

Our Azure Pipeline does all of the work for us once we sync our changes to Azure DevOps. The Pipeline is configured in YAML instead of the classic visual editor, which lets us store the pipeline configuration in our Azure DevOps repository alongside the Terraform code and take advantage of Configuration-as-Code (CaC). The Pipeline contains multiple tasks; by the end of the article, it will do the following:

- Download Secrets: Passwords and access keys will all be stored in Azure Key Vault, so they are never in our actual source files

- Checkout: Checks out the Azure project from source control

- Install Terraform: Installs Terraform on the build agent so we can use it

- Log in to Azure: Logs in to the Azure tenant where we will be deploying and managing resources

- Terraform Init: Initializes our working directory with our Terraform configuration files

- Terraform Plan: Used to create an execution plan. Terraform performs a refresh, unless explicitly disabled, and then determines what actions are necessary to achieve the desired state specified in the configuration files

- Terraform Apply: Used to apply the changes required to reach the desired state of the configuration

- Post-Job: Clean any cached credentials

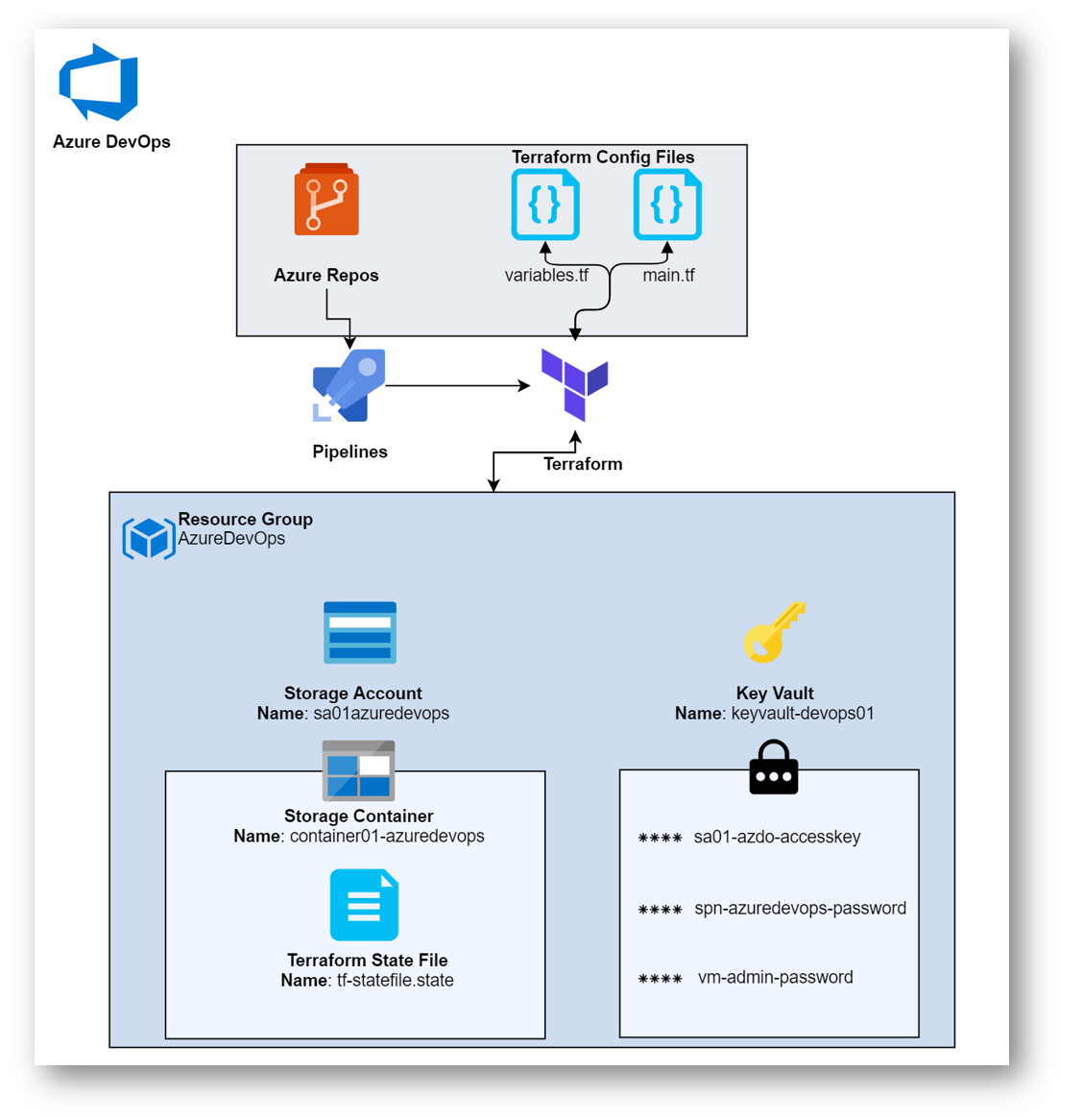

The diagram above gives an overview of the Pipeline and Terraform setup we will have by the end of the article. All of our source files are stored in an Azure Repo. In the repository are two Terraform files, main.tf and variables.tf. The file variables.tf declares the variables (and their default values) that the configuration file, main.tf, uses. Terraform automatically loads every .tf file in its working directory, so it uses these files together in our deployment without any extra configuration.

The Resource Group “AzureDevOps” contains a Storage Account with a Blob Container that houses our Terraform state file, as well as a Key Vault that holds three secrets. Through the pipeline, Terraform has access not only to the Terraform configuration files in our Azure Repo, but also to the Storage Account, so it can read and write the state file, and to the secrets in the Key Vault.

Create the Service Principal Name (SPN) and Client Secret

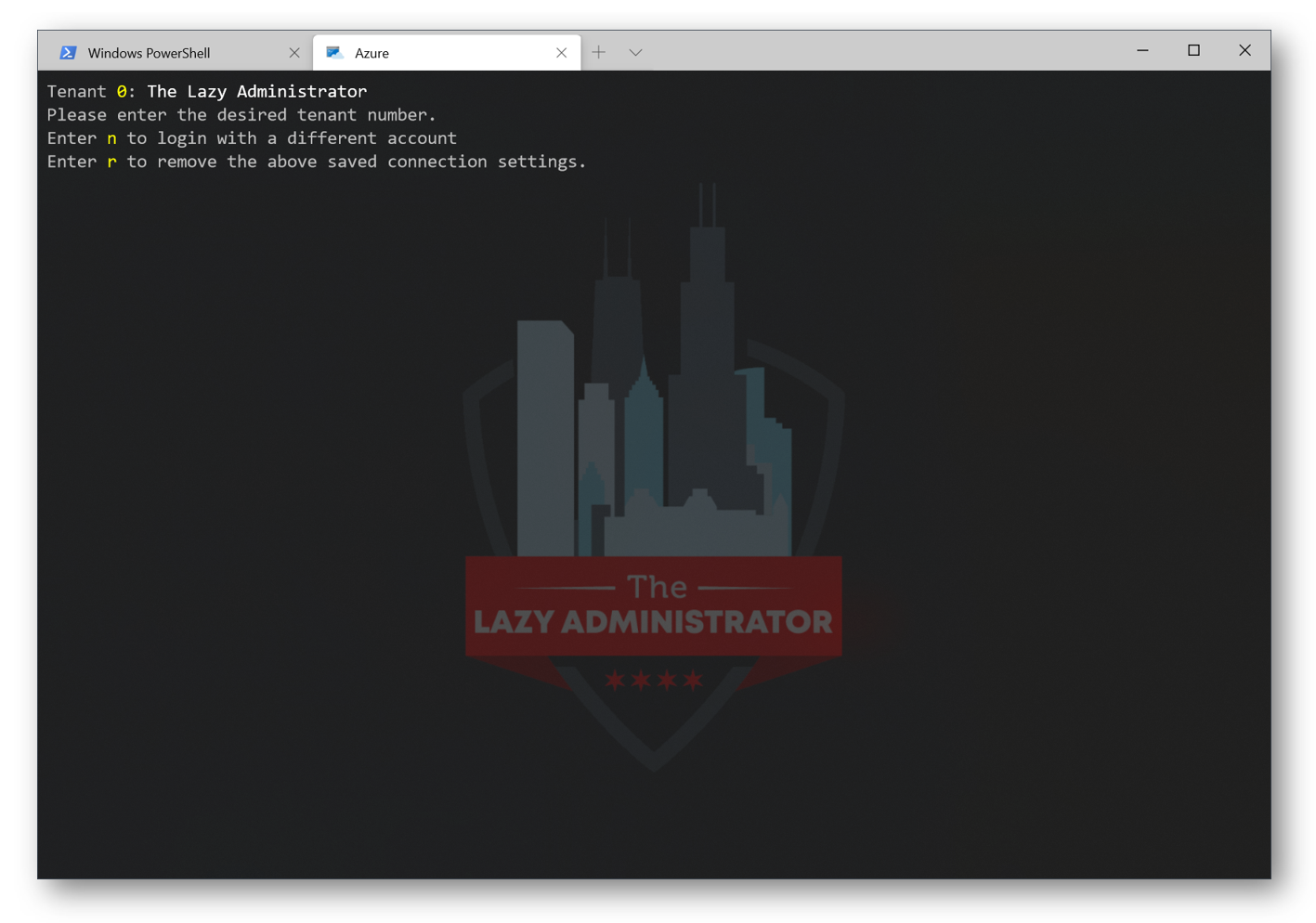

First, we must create a Service Principal Name (SPN). The SPN is the ‘account’ we will use to connect to our Azure environment and deploy our resources. To do so, connect to Azure; in my example, I am using the Windows Terminal. If this is your first time signing in to your Azure environment from the Windows Terminal, go to microsoft.com/devicelogin to sign in to your tenant.

If you have already signed into your Azure environment and saved your settings, you can just select your subscription, as pictured below.

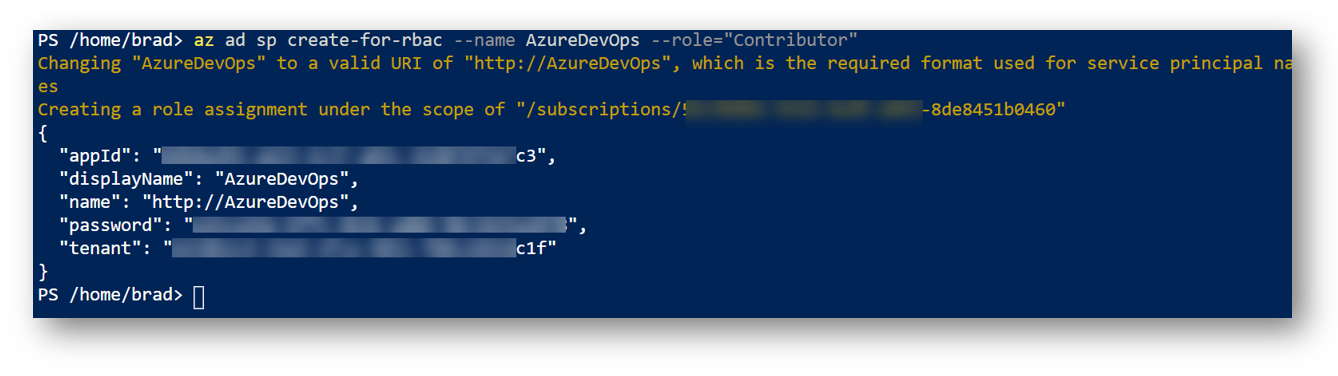

Note: For this next step, I had to run the command directly in Azure Cloud Shell in the browser (shell.azure.com) rather than from the Windows Terminal. If you ever run into an issue with Azure and the Terminal, try running ‘az login’ and follow the instructions, even if you are already authenticated.

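Now we need to create our SPN. In my example, I am going to create an SPN named AzureDevOps and grant it the ‘Contributor’ role. Run the following command to create your service principal, replacing <subscription-id> with your own subscription ID (recent versions of the Azure CLI require --scopes whenever --role is specified):

az ad sp create-for-rbac --name AzureDevOps --role="Contributor" --scopes /subscriptions/<subscription-id>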

IMPORTANT! Copy the following values from the output for later: appId, password, and tenant.

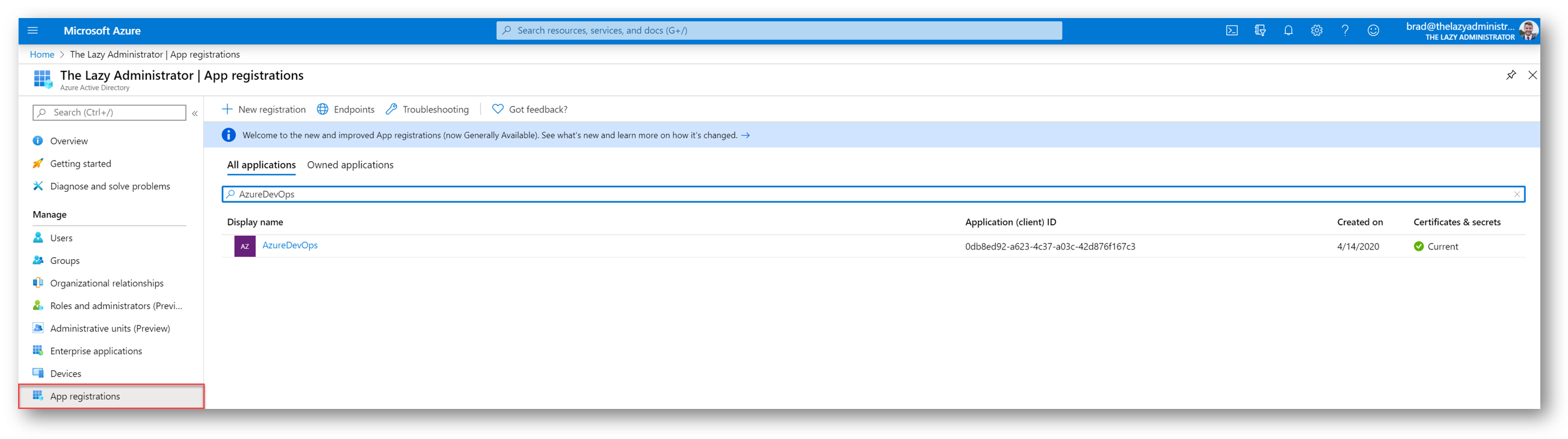

In the Azure Portal, I can go to Azure Active Directory > App Registrations > All Applications and see my SPN.
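The three values you copied are used in different places later in the article: appId becomes the application_id pipeline variable, password is stored in Key Vault as spn-azuredevops-password, and tenant becomes the tenant_id pipeline variable. The Python sketch below records that mapping by parsing the JSON that az ad sp create-for-rbac prints. The sample output is made up and redacted, and the helper name is hypothetical.

```python
import json
from typing import Dict

# Made-up, redacted example of the JSON printed by `az ad sp create-for-rbac`.
SAMPLE_OUTPUT = """
{
  "appId": "00000000-0000-0000-0000-000000000001",
  "displayName": "AzureDevOps",
  "password": "<client-secret>",
  "tenant": "00000000-0000-0000-0000-000000000002"
}
"""


def spn_settings(output_json: str) -> Dict[str, str]:
    """Map the service principal output to the names used later in this article."""
    data = json.loads(output_json)
    return {
        "application_id": data["appId"],               # pipeline variable in the YAML
        "spn-azuredevops-password": data["password"],  # Key Vault secret name
        "tenant_id": data["tenant"],                   # pipeline variable in the YAML
    }


if __name__ == "__main__":
    settings = spn_settings(SAMPLE_OUTPUT)
    # Print only the non-secret values.
    print(settings["application_id"], settings["tenant_id"])
```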

Create the Azure Resource Group and Resources

Once we finish creating our SPN, we must create our Azure Resource Group (RG) to store everything in. This will contain the storage account for our State File as well as our Key Vault.

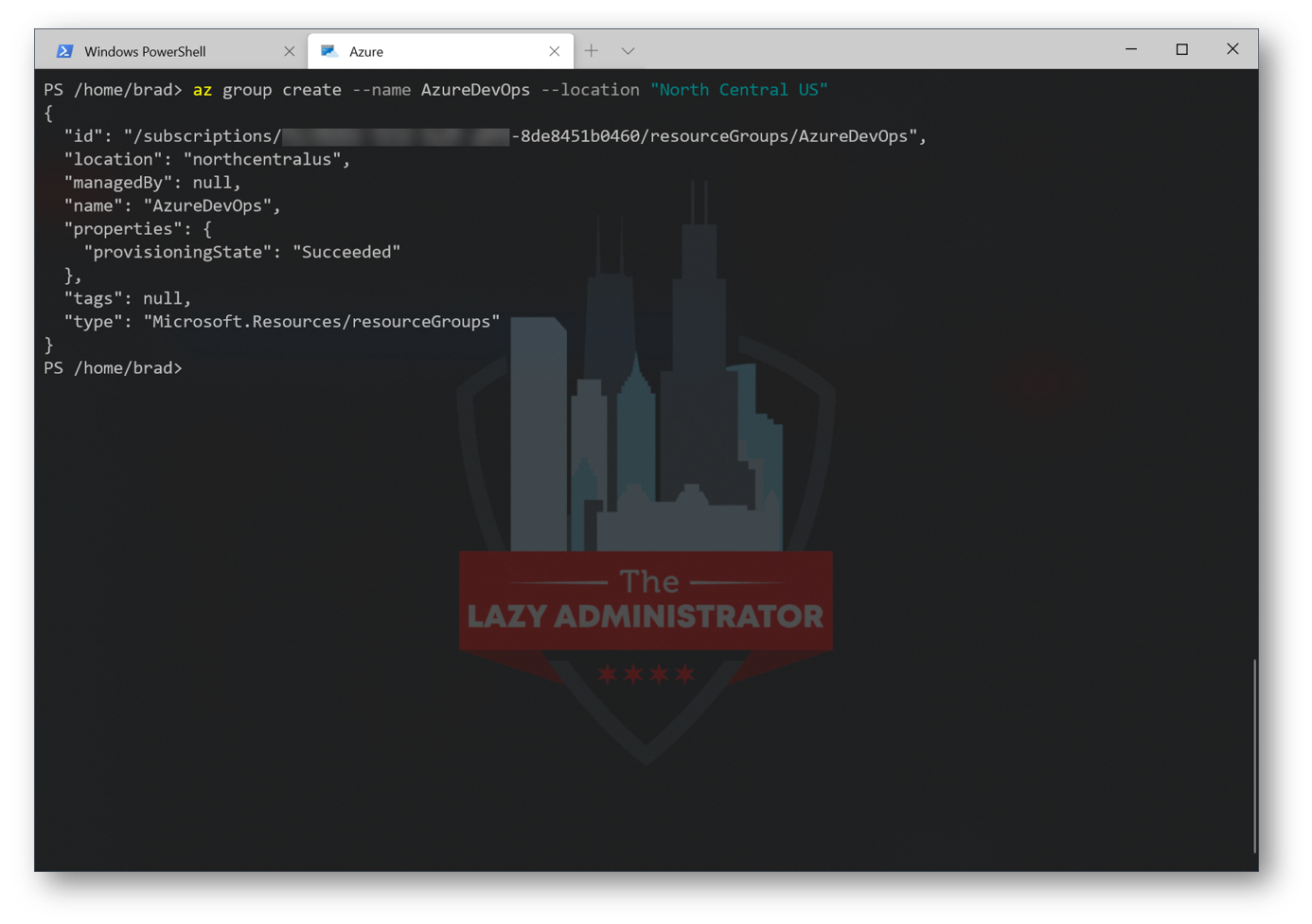

Give your RG a proper name and select a location. In my example, I am creating it in the North Central US region with the name ‘AzureDevOps.’

az group create --name AzureDevOps --location "North Central US"

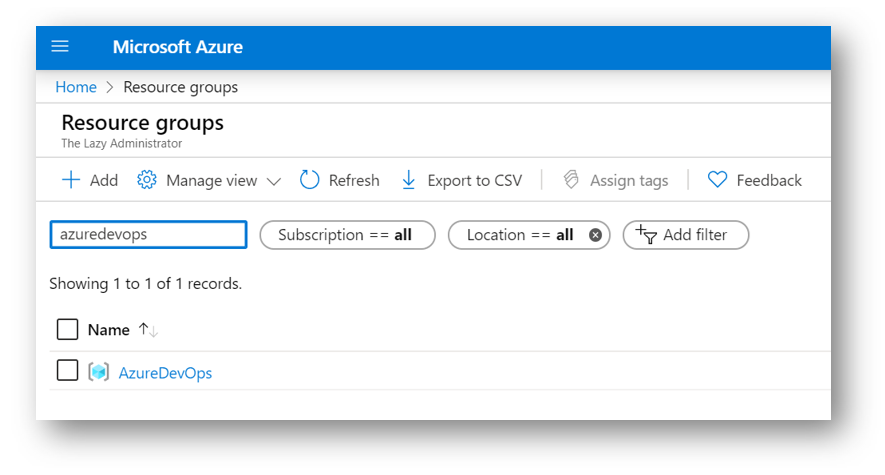

Back in the Azure Portal, I can see my newly created Resource Group.

Create the Storage Account

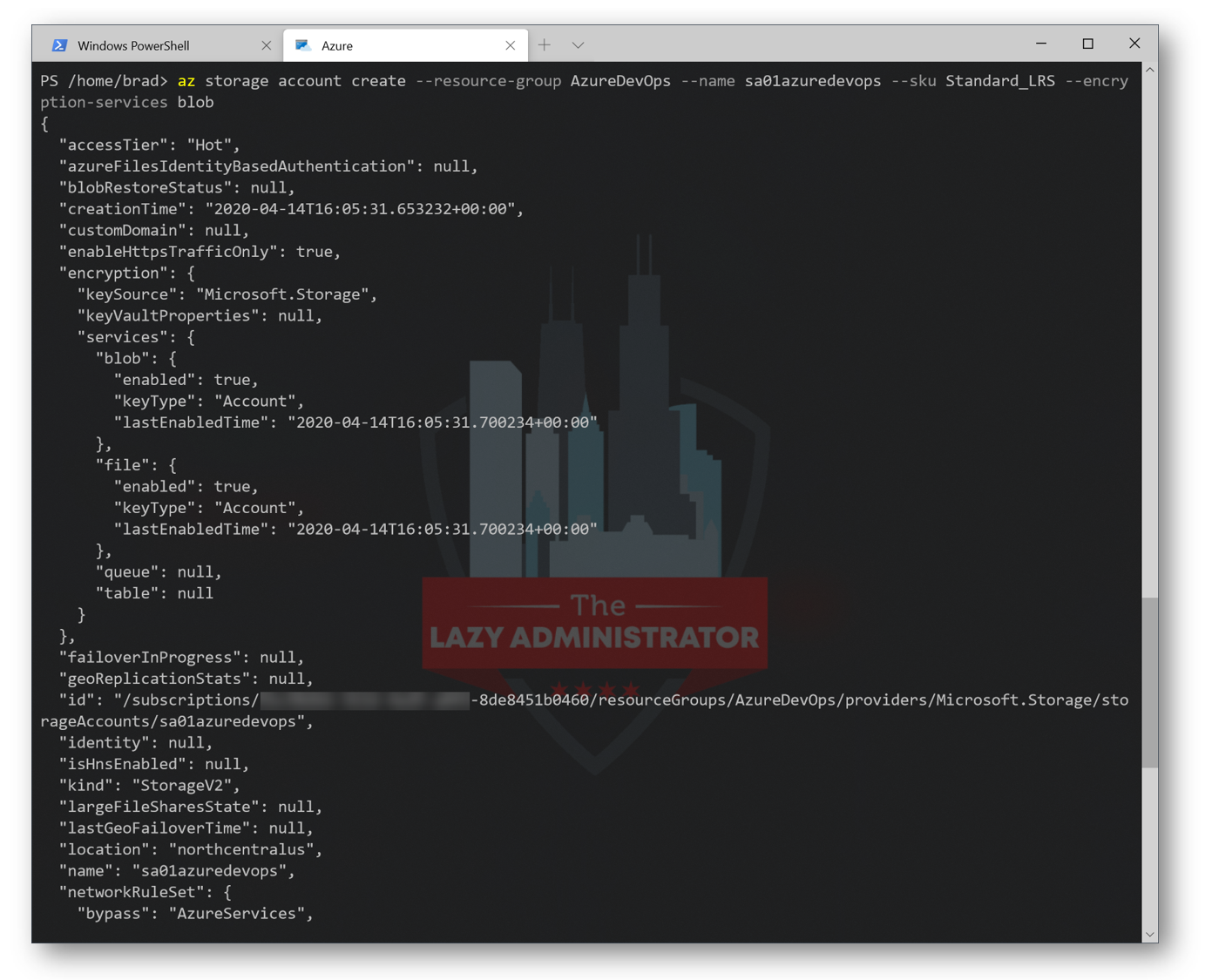

Next, we will configure a storage account in our newly created Resource Group. The storage account will store our Terraform state file. Below I will create a new storage account named ‘sa01azuredevops’. Using the Resource Group name from above (in my case, AzureDevOps), run the following command to create a new Storage Account (SA):

Note: Give your SA a proper name. Storage account names must be between 3 and 24 characters long, may contain only numbers and lowercase letters, and must be globally unique across Azure.

az storage account create --resource-group AzureDevOps --name sa01azuredevops --sku Standard_LRS --encryption-services blob

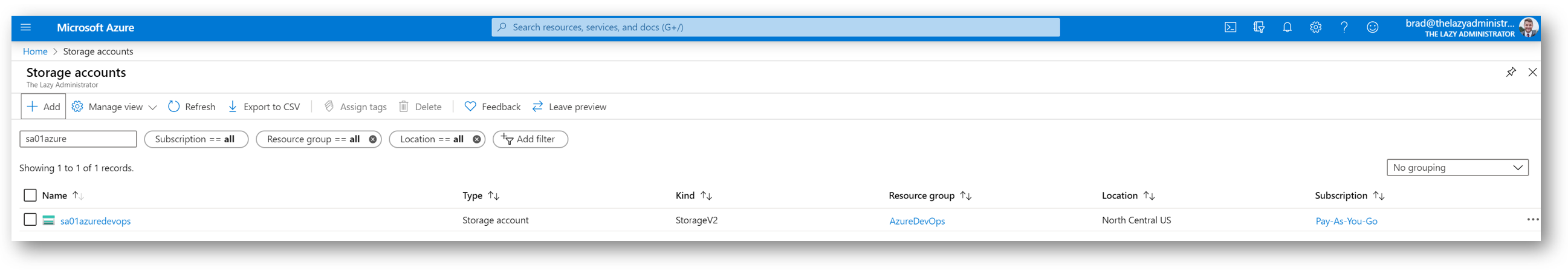

In the Azure Portal, we can see our new Storage Account, ‘sa01azuredevops’.
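If you script the creation of several environments, it can help to check a name before calling Azure. The Python sketch below encodes only the length and character rule from the note above; the function name is hypothetical, and global uniqueness cannot be checked locally (az storage account check-name --name <name> asks Azure whether a name is available).

```python
import re

# Rule from the note above: 3 to 24 characters, lowercase letters and digits only.
_STORAGE_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def is_valid_storage_account_name(name: str) -> bool:
    """Check the character and length rules (not global uniqueness)."""
    return _STORAGE_ACCOUNT_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["sa01azuredevops", "SA01AzureDevOps", "sa01-azuredevops", "sa"]:
        print(candidate, is_valid_storage_account_name(candidate))
```

Note that the hyphenated container name used later (container01-azuredevops) would fail this check; blob container names follow different rules that allow hyphens.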

Get the Storage Account Key

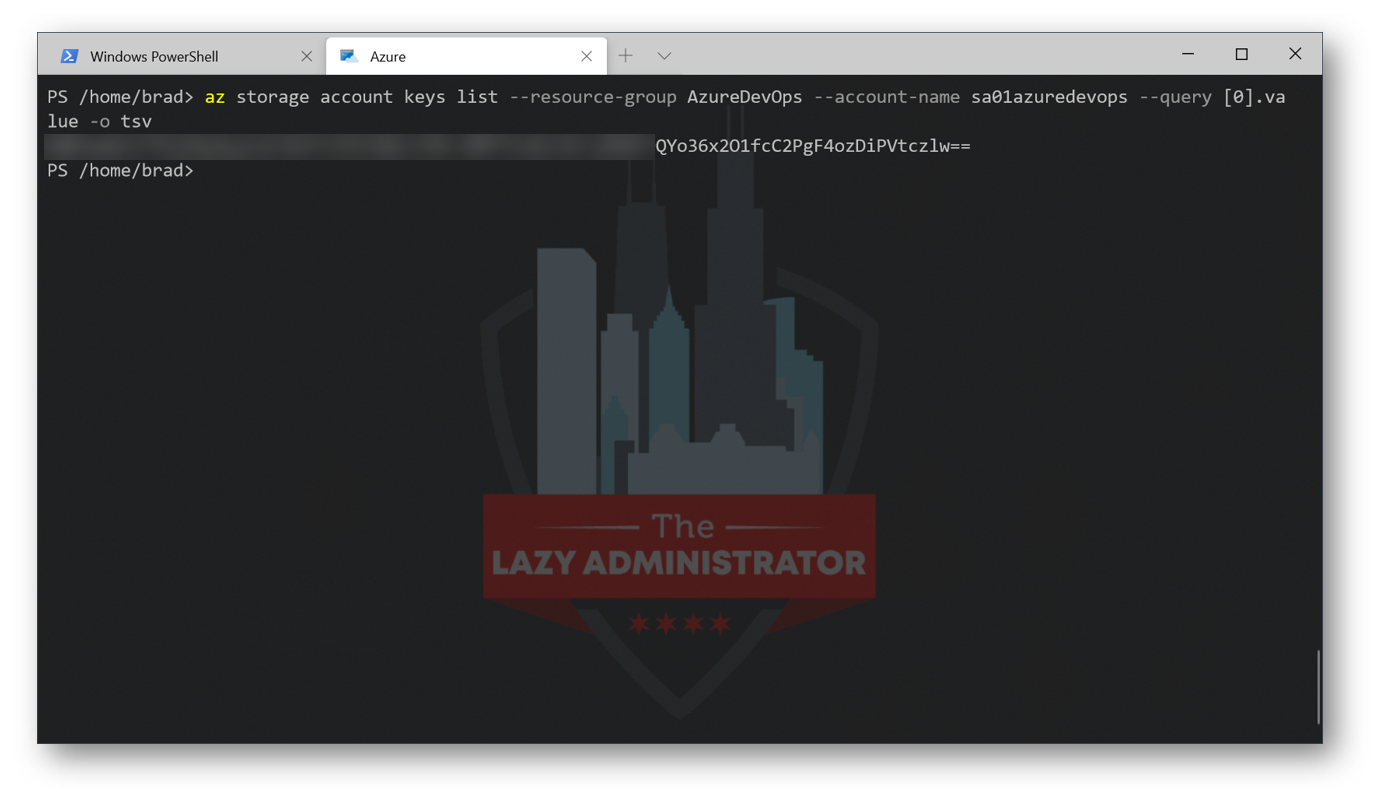

Next, we need to get the storage account key for our new SA. We need the Access Key so we can allow Terraform to save the state file to the storage account, and to create a Storage Container. Run the following command:

az storage account keys list --resource-group AzureDevOps --account-name sa01azuredevops --query "[0].value" -o tsv

IMPORTANT: Store the access key in the same place you stored the appId, password, and tenant earlier. You will need to reference it later.
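The --query argument in the command above is a JMESPath expression: the command returns a JSON array of keys, [0].value picks the value field of the first element, and -o tsv prints it without quotes. A Python equivalent, run against a made-up, shortened sample of that output, might look like this (the function name is hypothetical):

```python
import json

# Made-up, shortened sample of `az storage account keys list -o json` output.
# Real output includes additional fields for each key.
SAMPLE_KEYS = """
[
  {"keyName": "key1", "value": "key1-value=="},
  {"keyName": "key2", "value": "key2-value=="}
]
"""


def first_key_value(keys_json: str) -> str:
    """Python equivalent of the JMESPath query [0].value."""
    keys = json.loads(keys_json)
    return keys[0]["value"]


if __name__ == "__main__":
    print(first_key_value(SAMPLE_KEYS))  # like -o tsv: printed without quotes
```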

Create the Storage Container

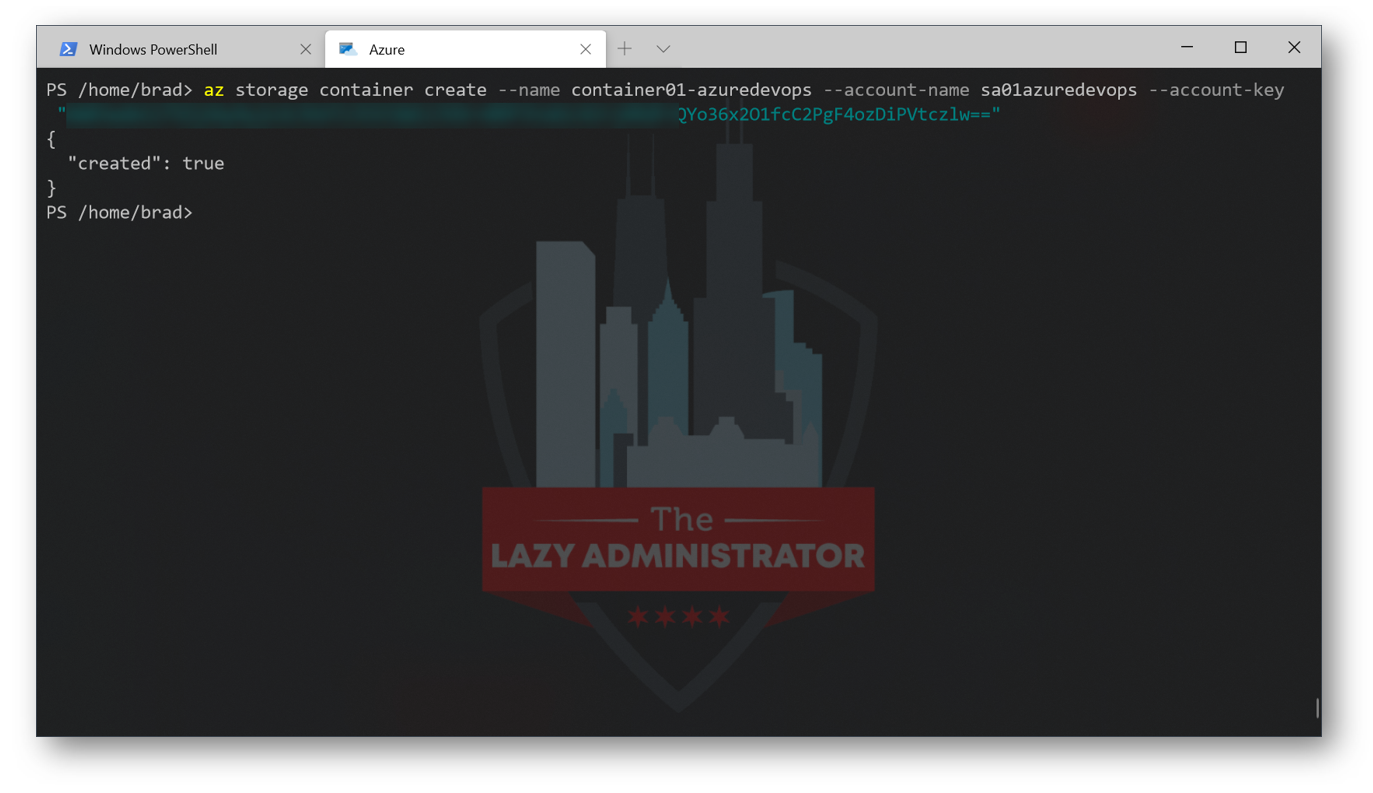

Using that storage key, we will now create a Blob container. In my example, I will create a storage container named ‘container01-azuredevops’. Run the following command:

az storage container create --name container01-azuredevops --account-name sa01azuredevops --account-key "AWB3DFSDFwdml17DiJCXJ2WliJVfsdfnA3/AJr666756o36xC2PgdfdfF4odfdfczlw=="

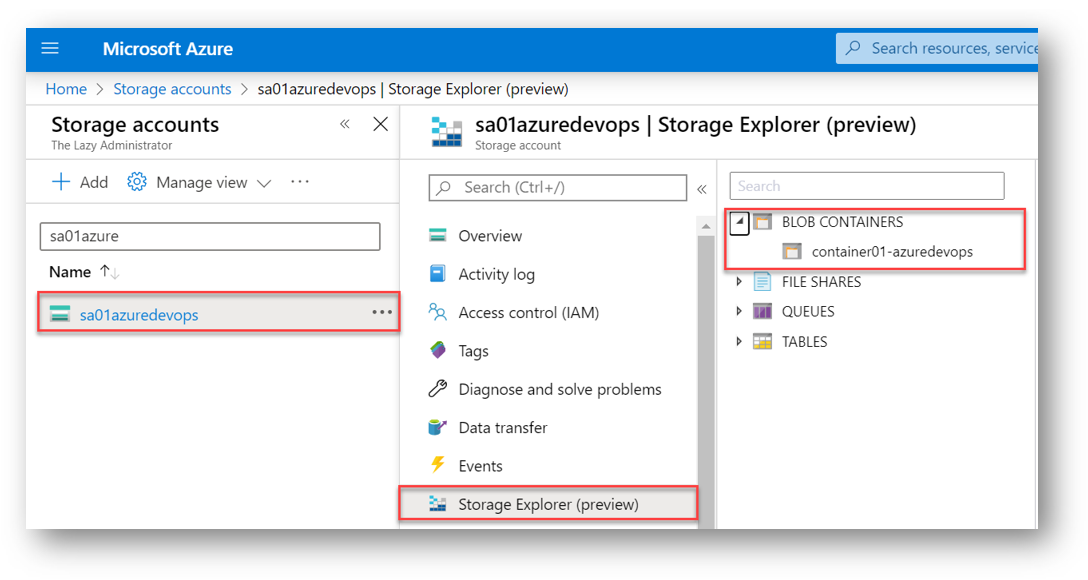

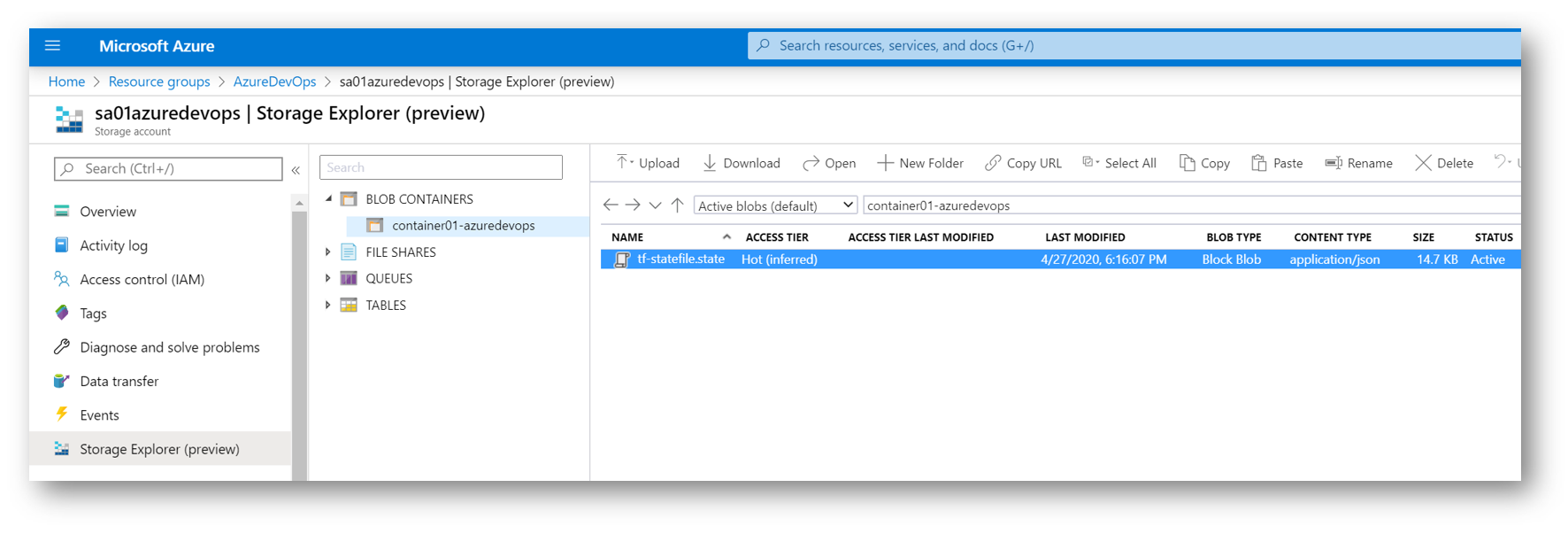

Now in the Azure Portal, I can go into the Storage Account and select Storage Explorer and expand Blob Containers to see my newly created Blob Storage Container.

Create the Key Vault

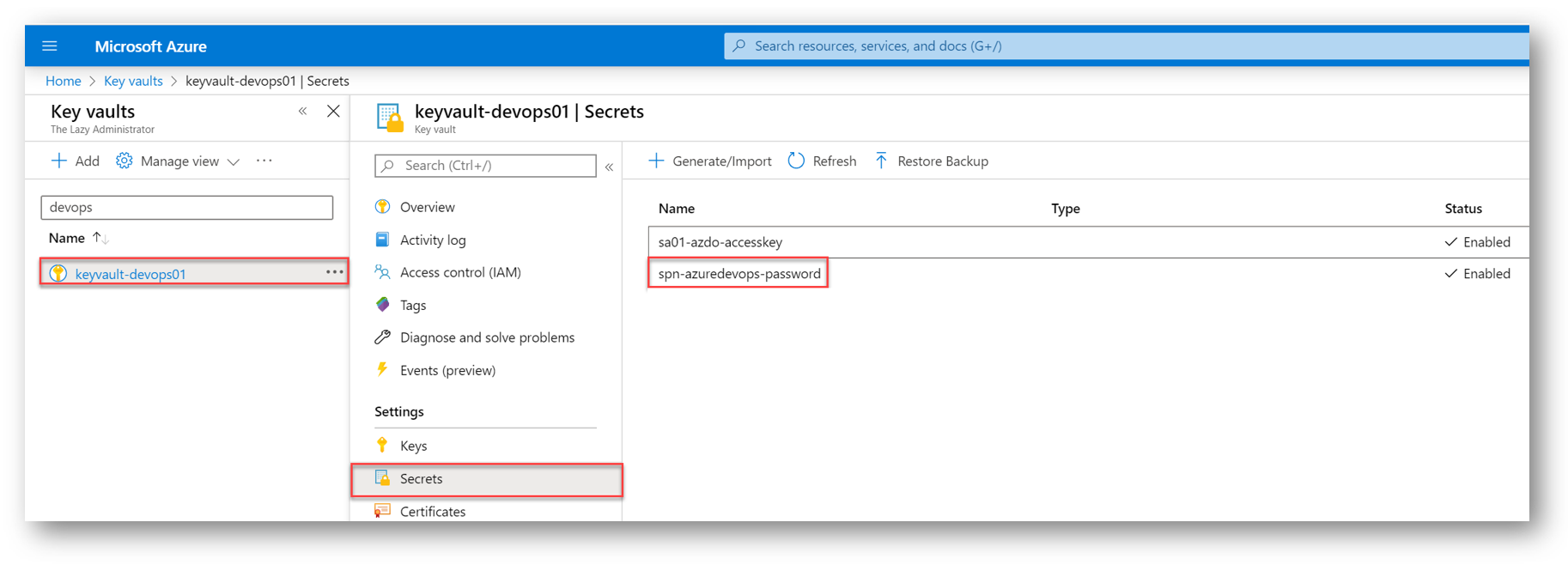

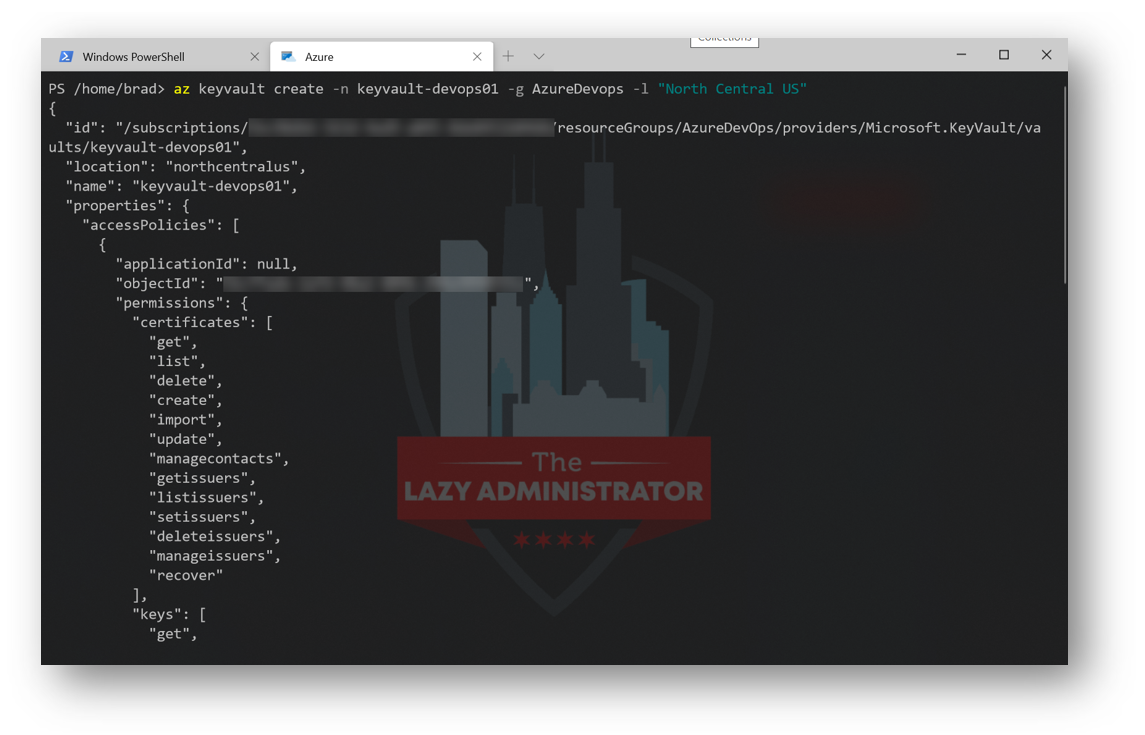

Next, we will create an Azure Key Vault in our resource group for our Pipeline to access secrets. In my example, my Key Vault will be named ‘keyvault-devops01’ and will be located in the North Central US region. -g specifies the Resource Group it will be placed in, the “AzureDevOps” Resource Group we created earlier. Run the following command to create your Azure Key Vault:

az keyvault create -n keyvault-devops01 -g AzureDevOps -l "North Central US"

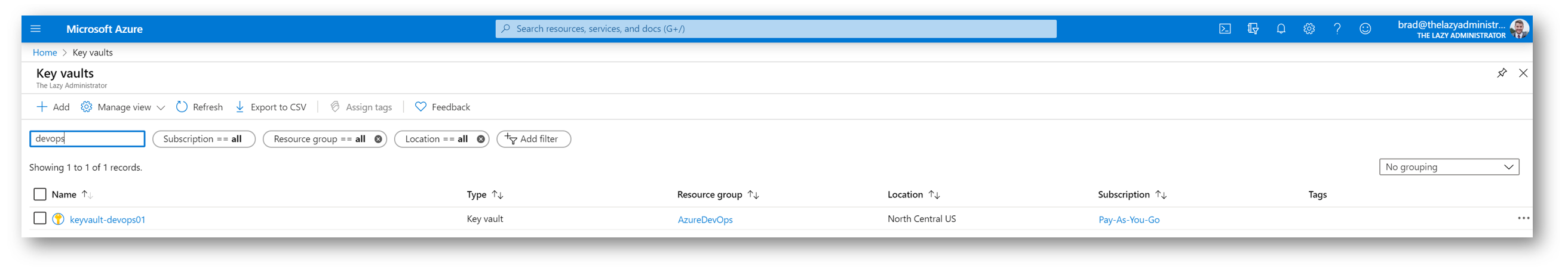

Flipping back to the Azure Portal, I can see the new Key Vault

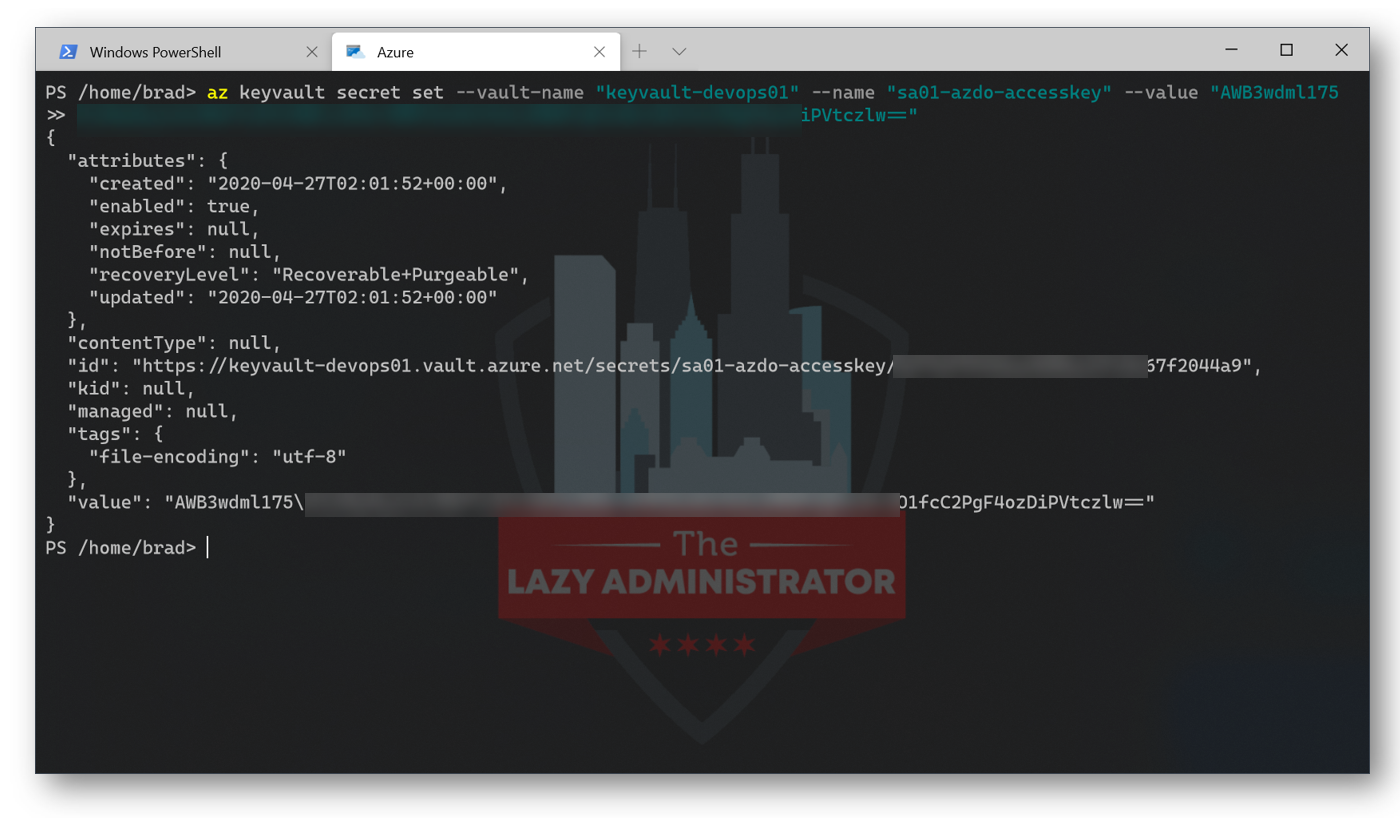

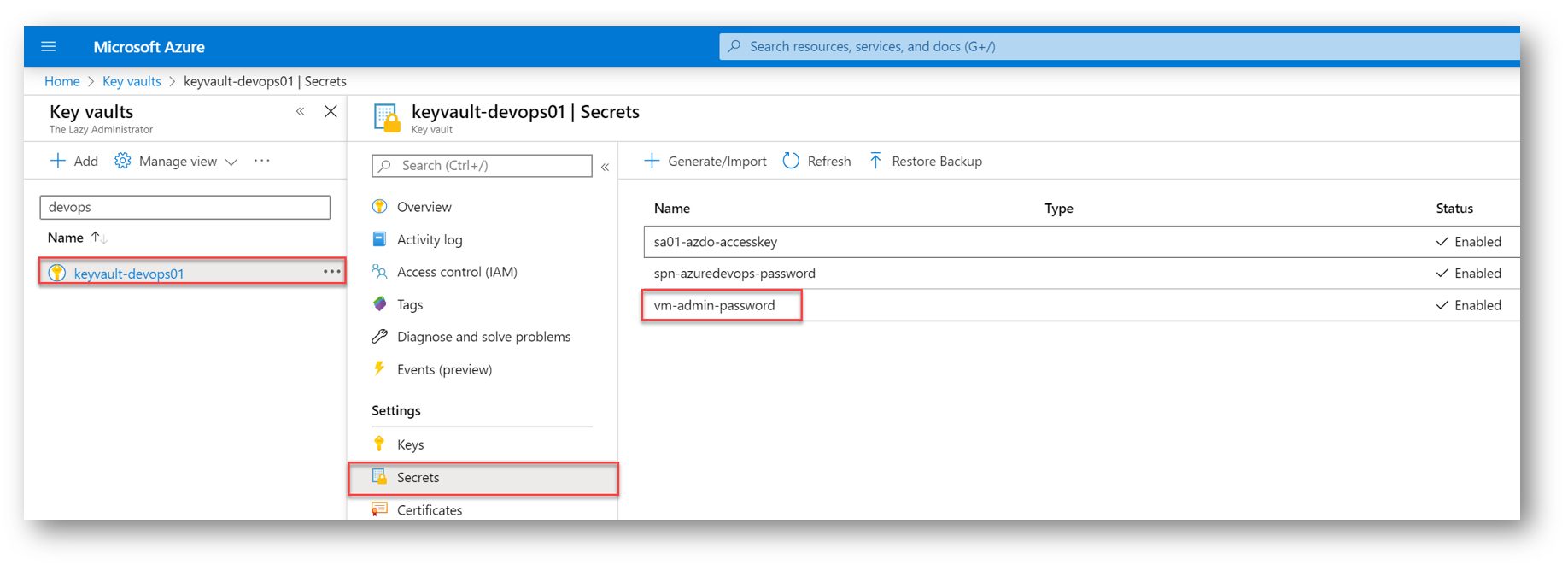

Add the Storage Account Access Key to Key Vault

Next, we will need to add the Storage Account access key you obtained earlier to your new Azure Key Vault. The access key should end with ==. Below I add my storage account's access key to my Key Vault as a secret named ‘sa01-azdo-accesskey’.

az keyvault secret set --vault-name "keyvault-devops01" --name "sa01-azdo-accesskey" --value "AWB3wdmMNSbdjnJKBkr+09PJtnA3/AJrj4RdFdjkBNFkjsdfkVtczlw=="
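The “ends with ==” hint follows from how the key is encoded. Assuming the key is 64 bytes encoded as Base64 (which matches the usual 88-character storage account key), 64 bytes leave one byte over after groups of three, so the encoding needs two = padding characters. A small Python heuristic to sanity-check a key before storing it might look like this. The function name is hypothetical, and passing the check does not prove the key is the right one.

```python
import base64
import binascii


def looks_like_storage_key(key: str) -> bool:
    """Heuristic: assumes a storage account key is 64 bytes encoded as Base64.
    64 = 3 * 21 + 1, so the encoding ends with two '=' padding characters
    and is (21 + 1) * 4 = 88 characters long."""
    try:
        raw = base64.b64decode(key, validate=True)
    except binascii.Error:
        return False  # not valid Base64 (stray characters or bad padding)
    return len(raw) == 64


if __name__ == "__main__":
    sample = base64.b64encode(bytes(range(64))).decode()  # fake 64-byte key
    print(len(sample), sample.endswith("=="), looks_like_storage_key(sample))
```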

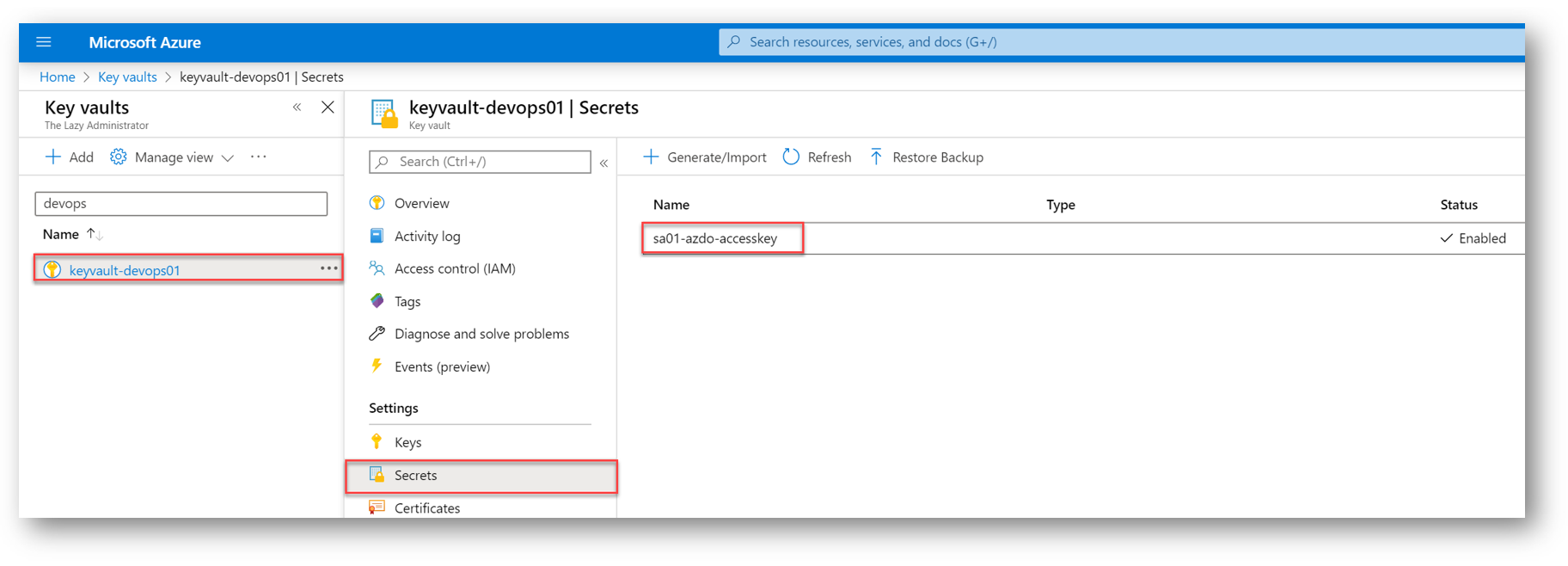

In the Key Vault under Secrets, I can now see my access key secret to my Storage Account

Add the Service Principal Password to Key Vault

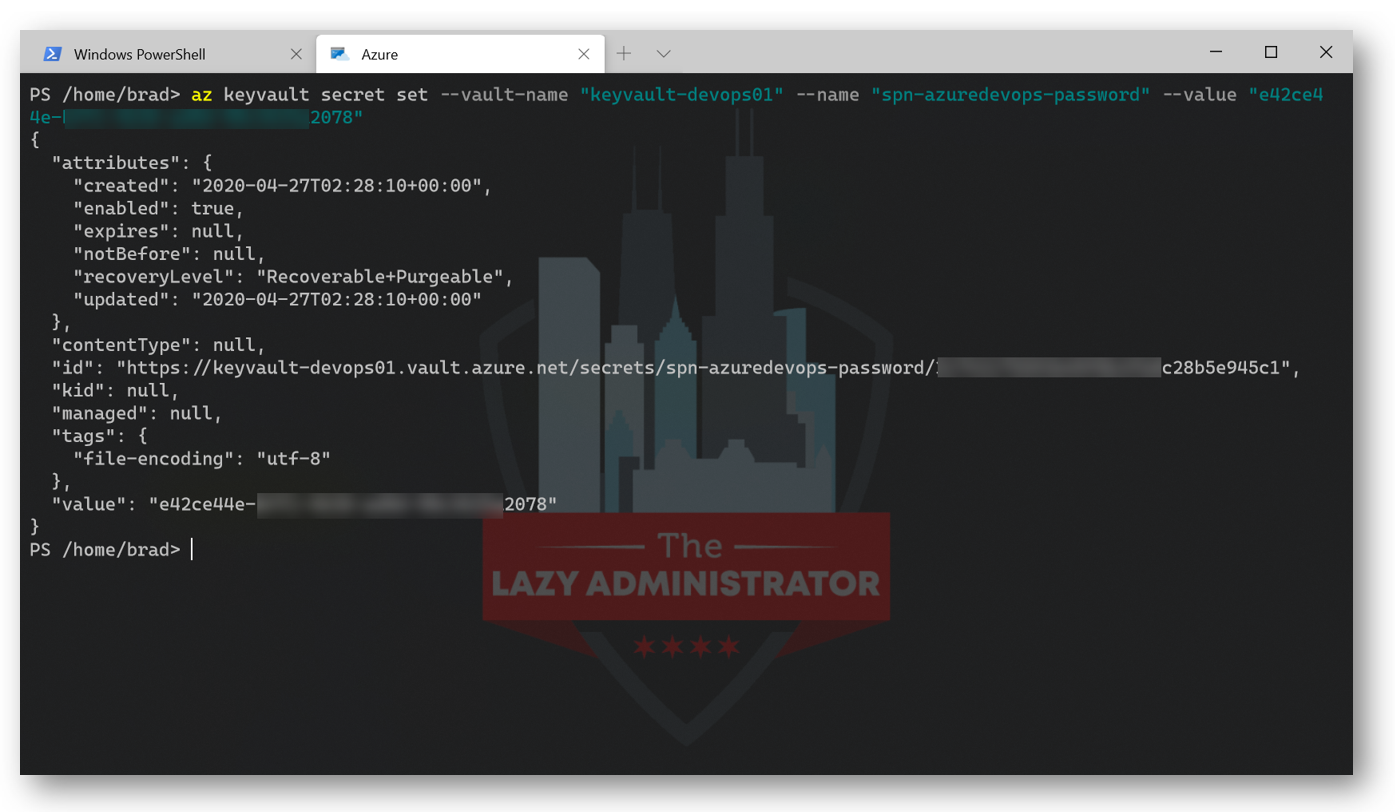

Now we need to add our Service Principal Name (SPN) password to our Key Vault. Here I will add my SPN’s password in an entry named ‘spn-azuredevops-password.’

az keyvault secret set --vault-name "keyvault-devops01" --name "spn-azuredevops-password" --value "e42ce33r-bff1-4c26-ad0d-98456078"

In the Key Vault, I can now see my secret for my SPN

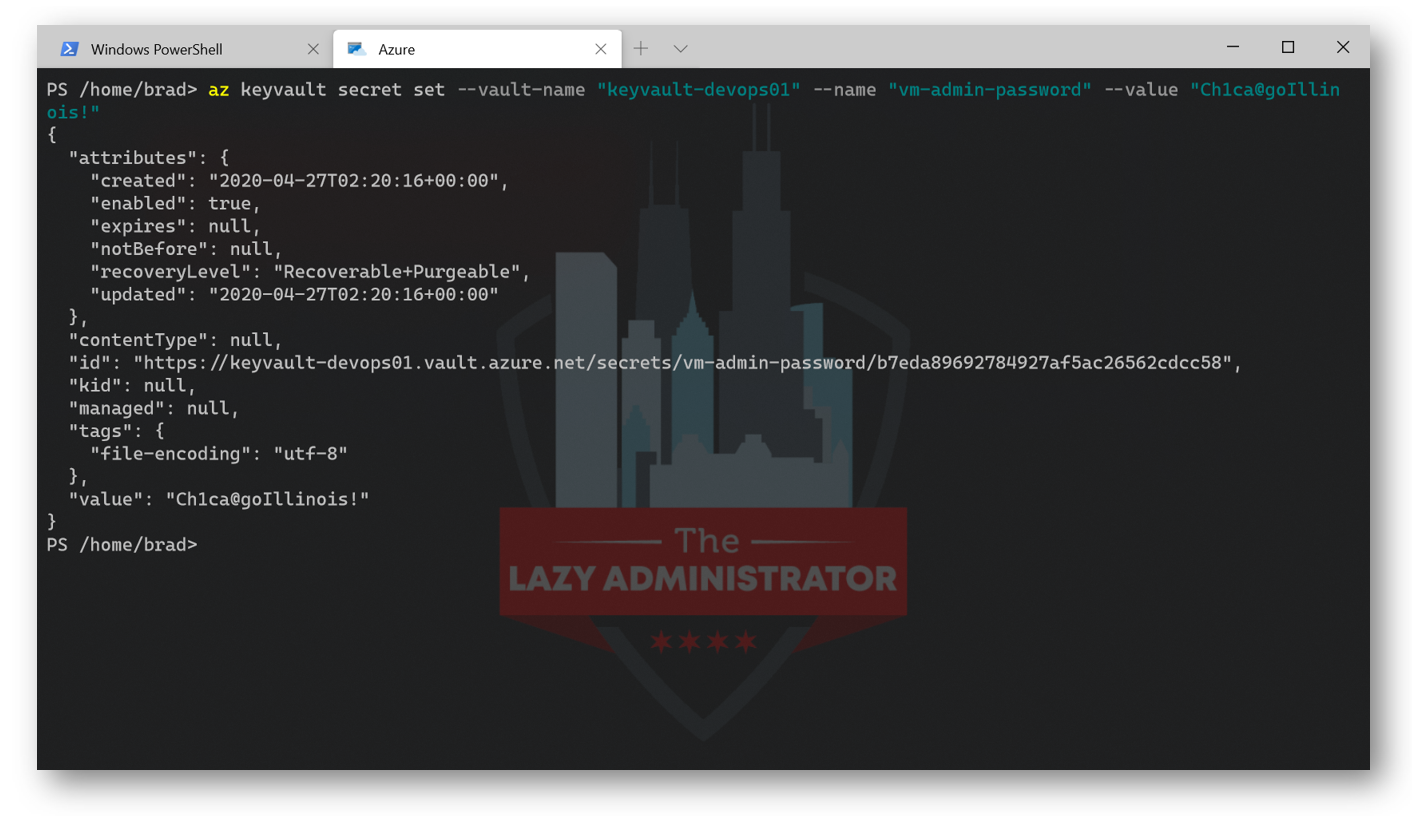

Add the VM Admin Password to Key Vault

Next, we need to add our virtual machine’s administrator password to our Azure Key Vault. I am going to set my virtual machine’s admin password to Ch1c@goIllinois!

az keyvault secret set --vault-name "keyvault-devops01" --name "vm-admin-password" --value "Ch1c@goIllinois!"
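Azure rejects VM admin passwords that don't meet its complexity rules, and with this setup you would only find out when the Terraform Apply step fails. As documented for Windows VMs at the time of writing, the password must be 12 to 123 characters long and contain at least three of: a lowercase letter, an uppercase letter, a digit, and a special character; Azure also refuses a list of common passwords. The Python sketch below checks only the length and character-class rules, so treat it as a quick pre-check rather than the authoritative validation (the function name is hypothetical).

```python
def meets_windows_vm_password_rules(password: str) -> bool:
    """Length 12-123 and at least three of four character classes.
    Azure's list of disallowed common passwords is not checked here."""
    if not 12 <= len(password) <= 123:
        return False
    character_classes = [
        any(c.islower() for c in password),      # lowercase letter
        any(c.isupper() for c in password),      # uppercase letter
        any(c.isdigit() for c in password),      # digit
        any(not c.isalnum() for c in password),  # special character
    ]
    return sum(character_classes) >= 3


if __name__ == "__main__":
    print(meets_windows_vm_password_rules("Ch1c@goIllinois!"))
```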

And finally, our last secret is safely in our Azure Key Vault

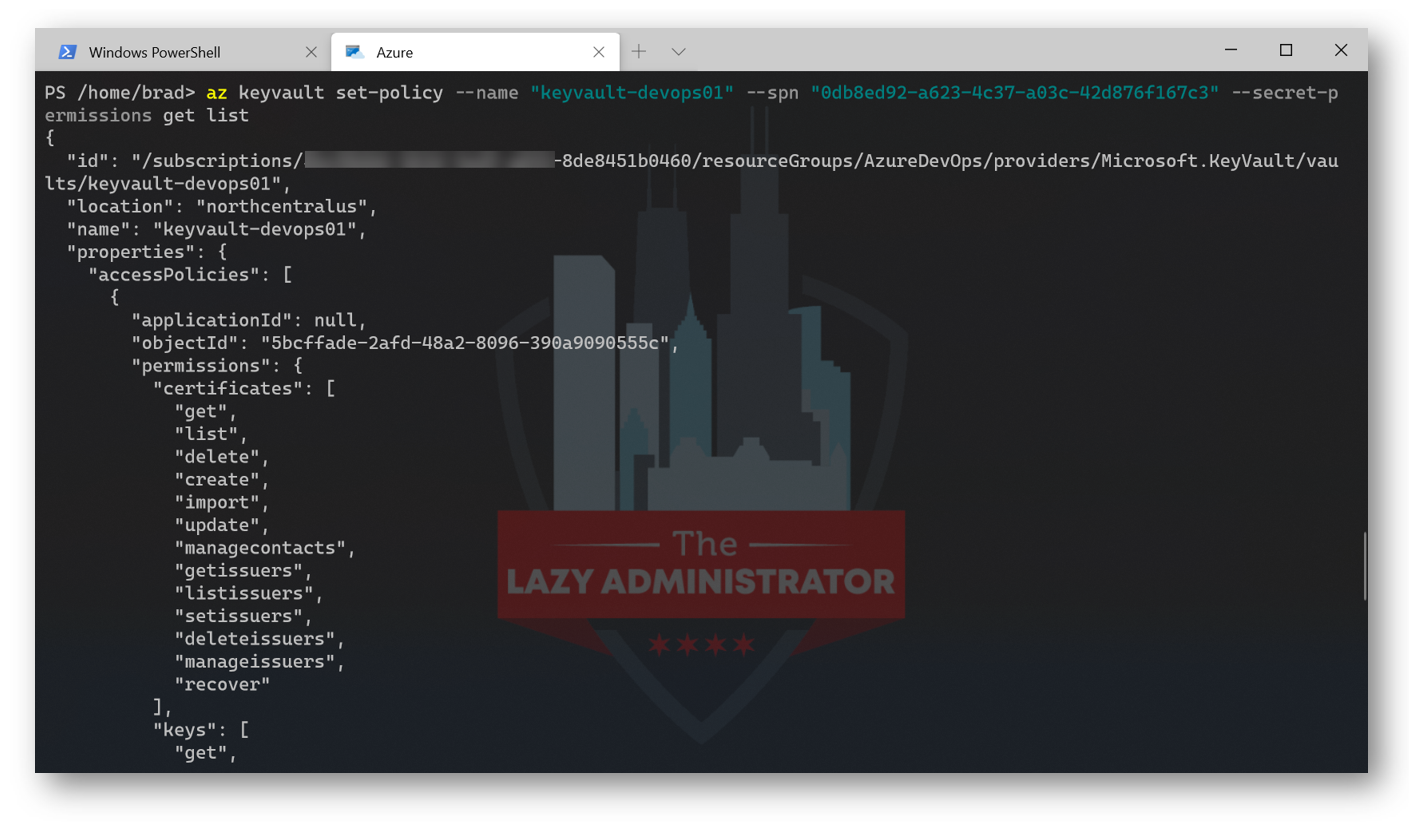

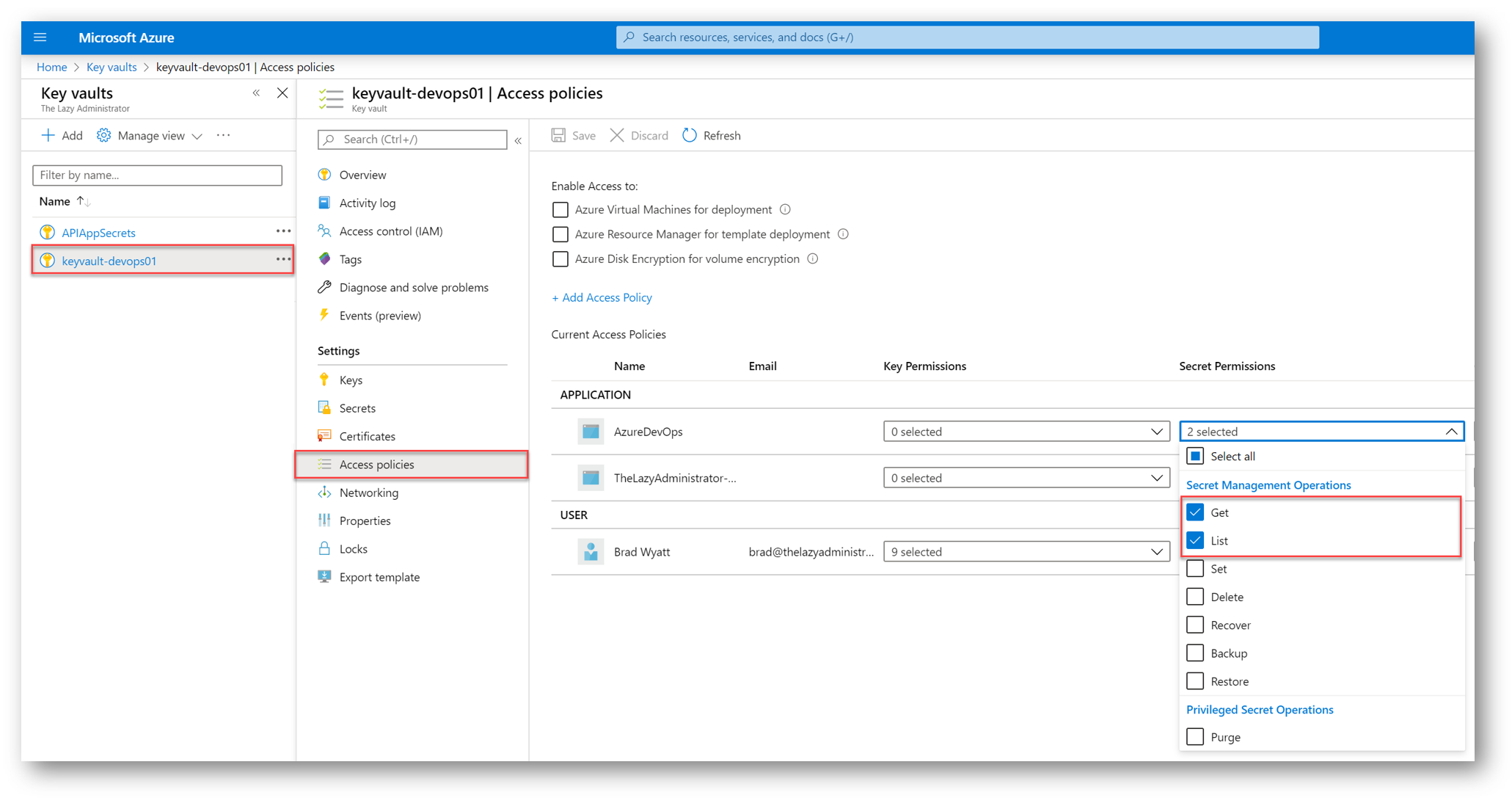

Allow the SPN Access to Key Vault

Next, we need to allow our SPN access to the Key Vault and its secrets. Using the appId we got earlier when we created our new SPN, run the following command to grant your SPN Get and List permissions on your Key Vault secrets.

az keyvault set-policy --name "keyvault-devops01" --spn "0db8ed92-a623-4c37-a03c-42d8398f7c3" --secret-permissions get list

In our Key Vault under Access Policies, we can now see that our SPN, ‘AzureDevOps,’ has Get and List permissions.

Create and Configure Azure DevOps

If you don’t already have your Azure DevOps organization set up, head on over to dev.azure.com and create your organization. Once the organization is created, you can create a Team Project.

Note: Microsoft recommends creating only one Team Project per organization. Within that one Team Project, you can create multiple projects by creating Teams. You can also create a new repository per project, and each project will also contain its own Azure DevOps board.

Create the Organization

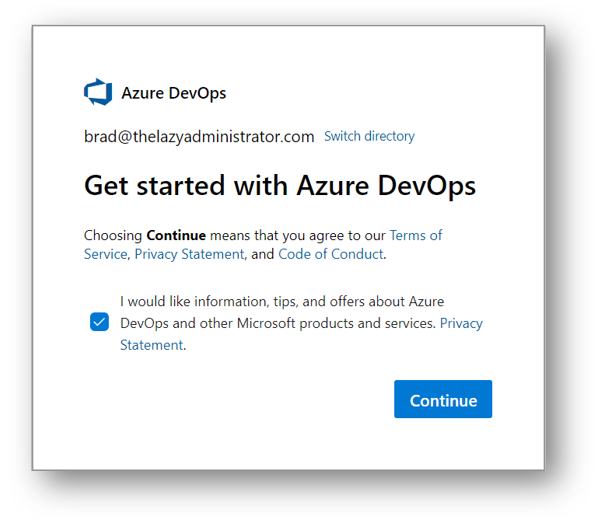

When you go to dev.azure.com, you will be asked to accept the terms and conditions. Select Continue.

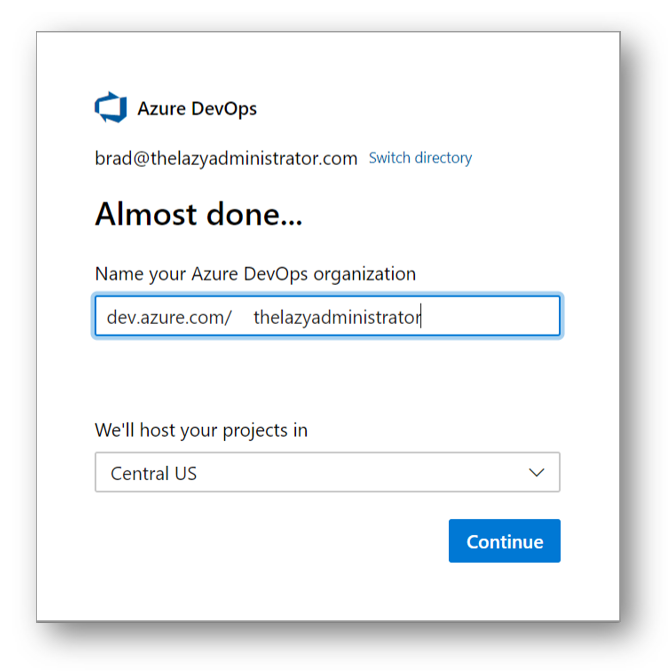

Next, create a name for your Azure DevOps organization and a region. I am going to create an organization named TheLazyAdministrator

Create the Team Project

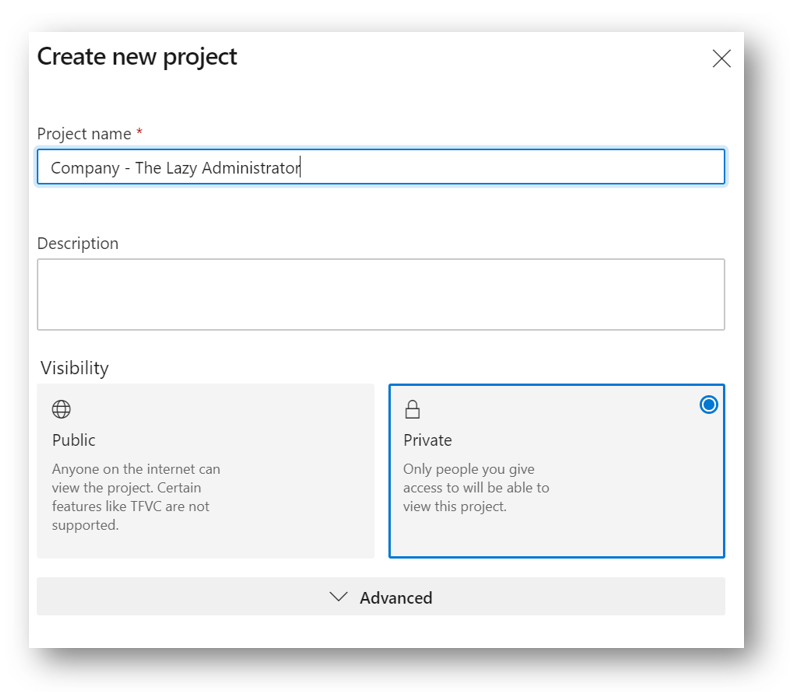

Next, we will create a Team Project. Give your Project a Name and select Public or Private. I created a Team Project called “Company – The Lazy Administrator”

Create the Repository

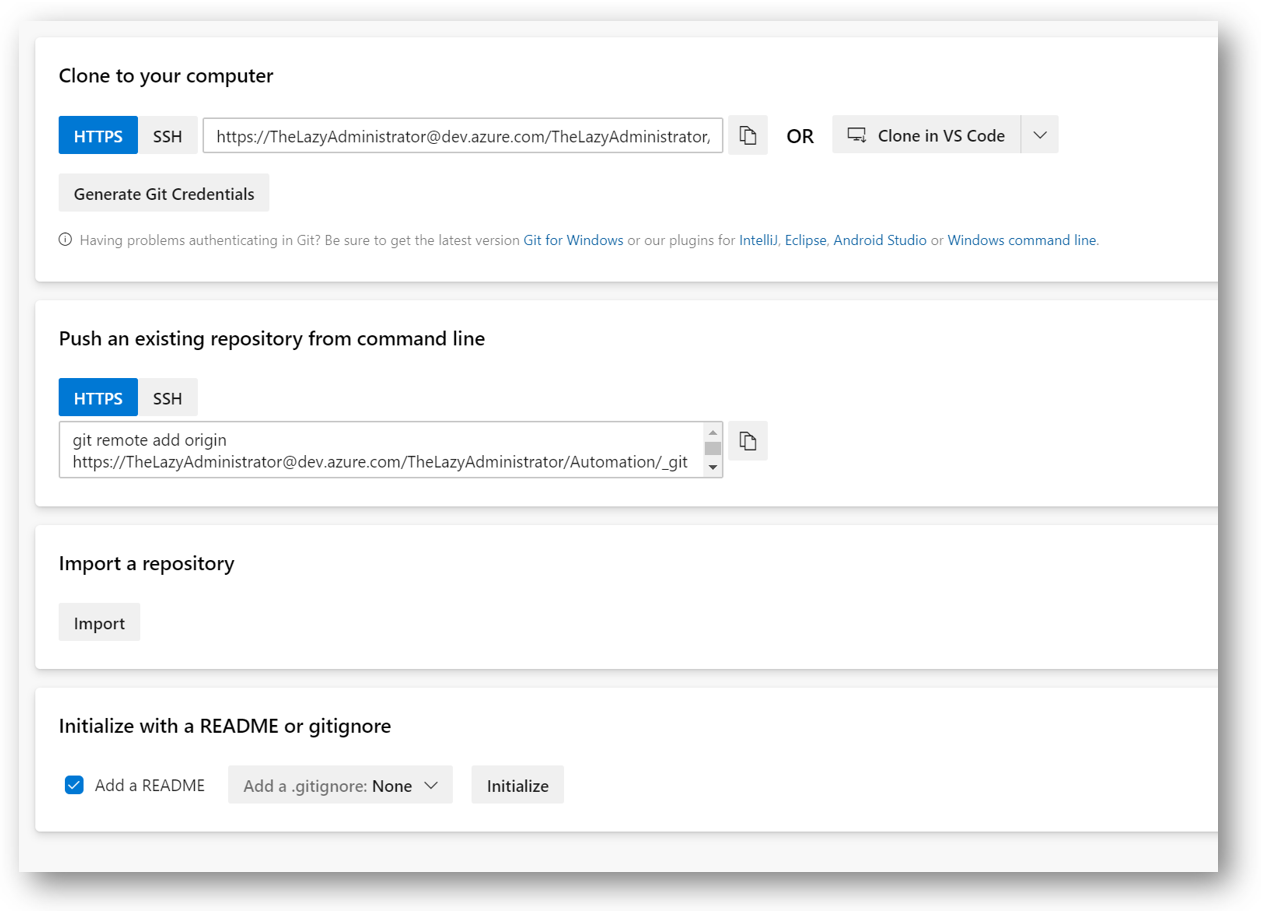

Click your new Team Project and select Repos. Click Initialize to create a blank repository

Create the Variable Group

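Next, we need to create a Variable Group to store values and make them available across multiple pipelines. Create it with the following command (the az pipelines commands come from the azure-devops extension for the Azure CLI, which you can add with az extension add --name azure-devops):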

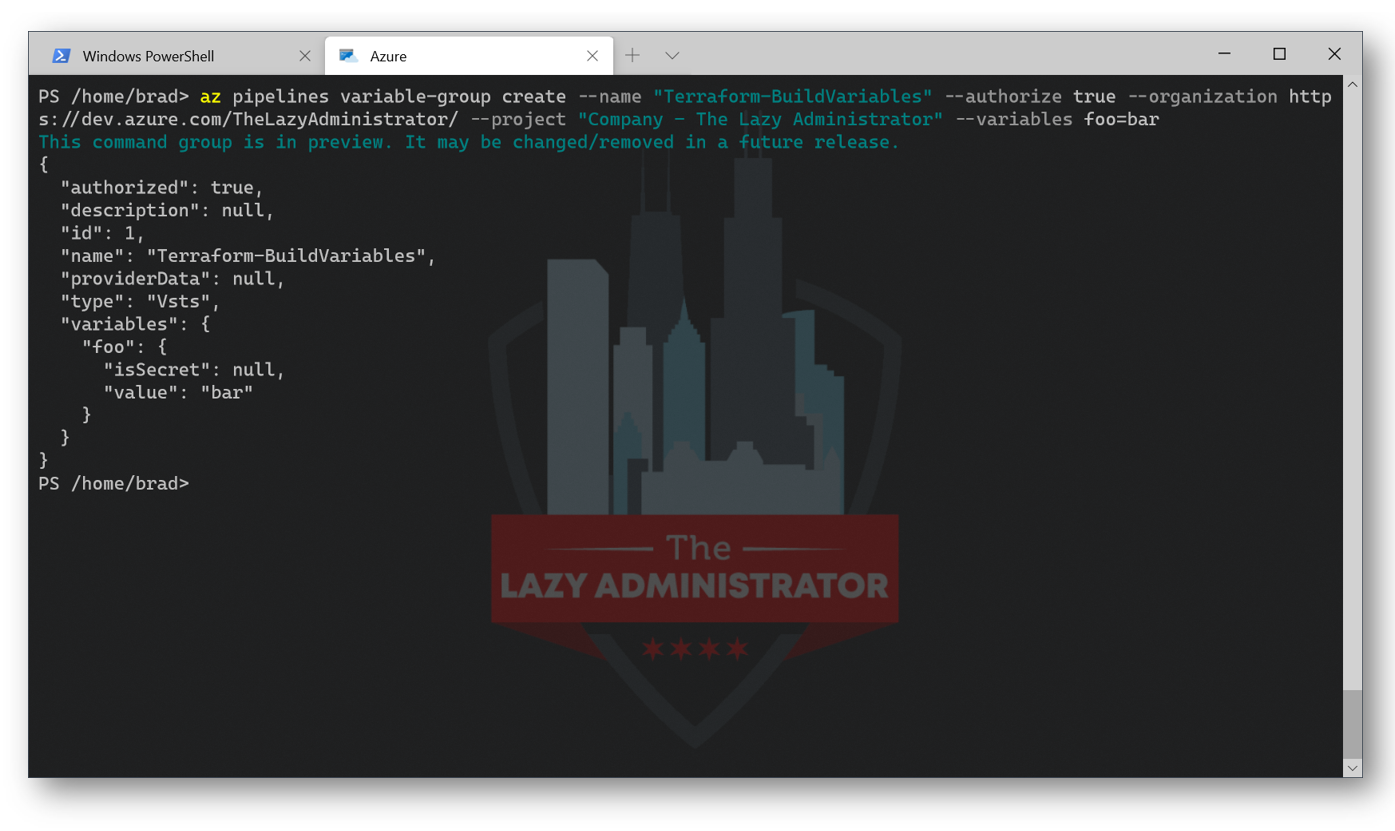

az pipelines variable-group create --name "Terraform-BuildVariables" --authorize true --organization https://dev.azure.com/TheLazyAdministrator/ --project "Company - The Lazy Administrator" --variables foo=bar

NOTE: The organization is my Azure DevOps organization URL and the Project is my Team Project I created earlier. The foo=bar variable isn’t used, but a single variable is required to first create the variable group as noted in Adam the Automator’s blog – be sure to check it out!

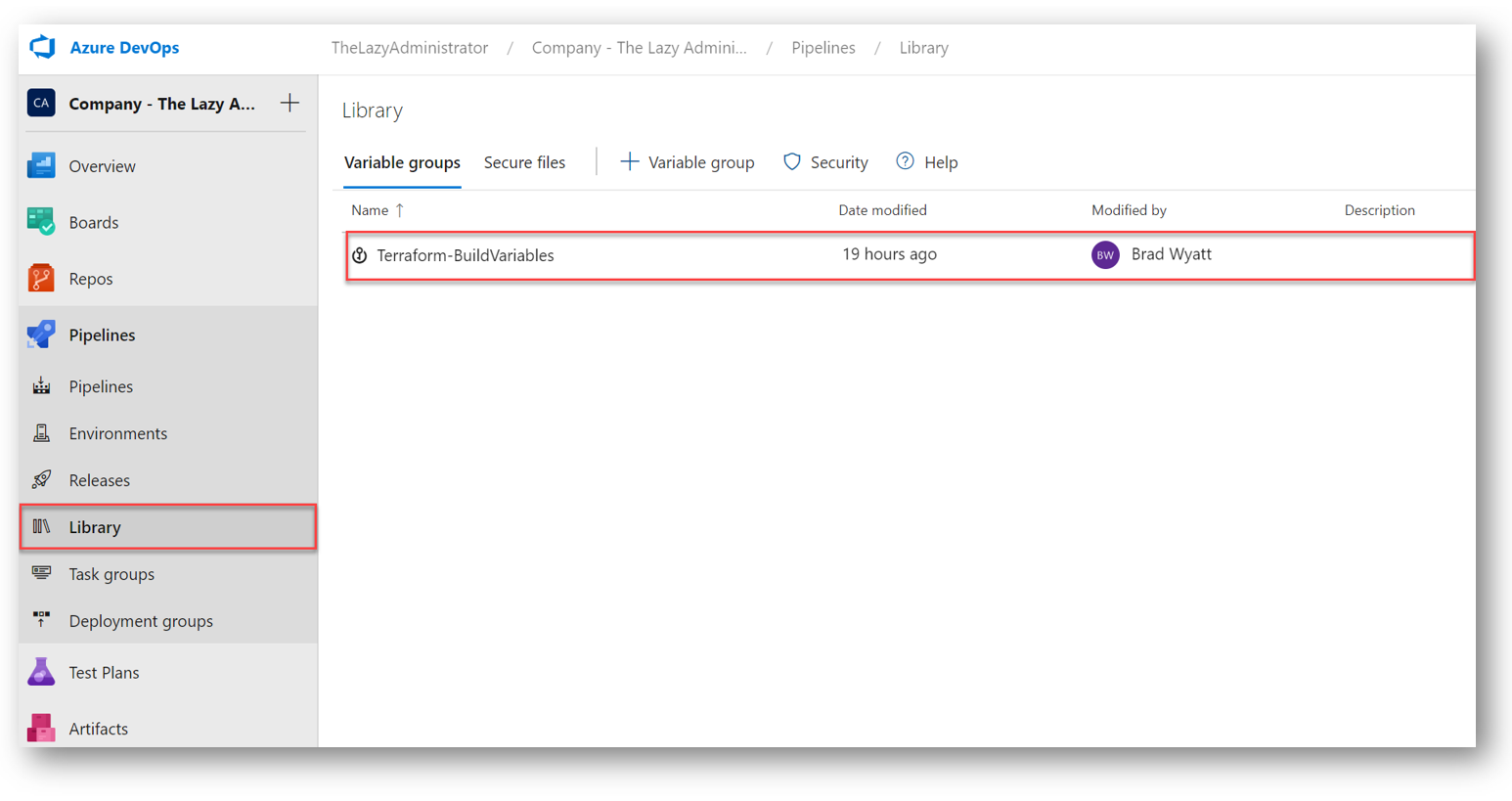

In Azure DevOps under Pipelines > Library I can now see my new Variable Group

Connect the Variable Group to Key Vault

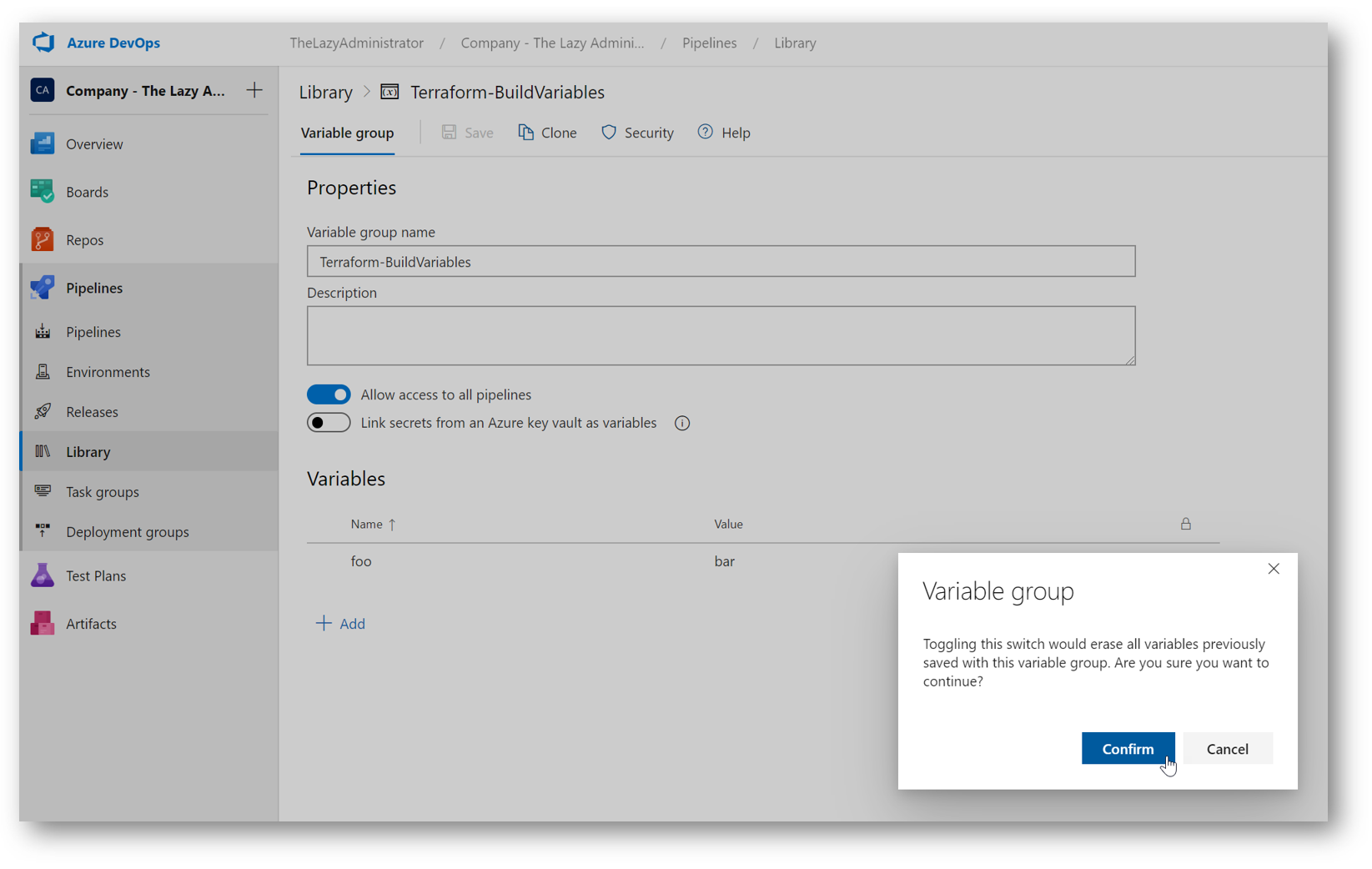

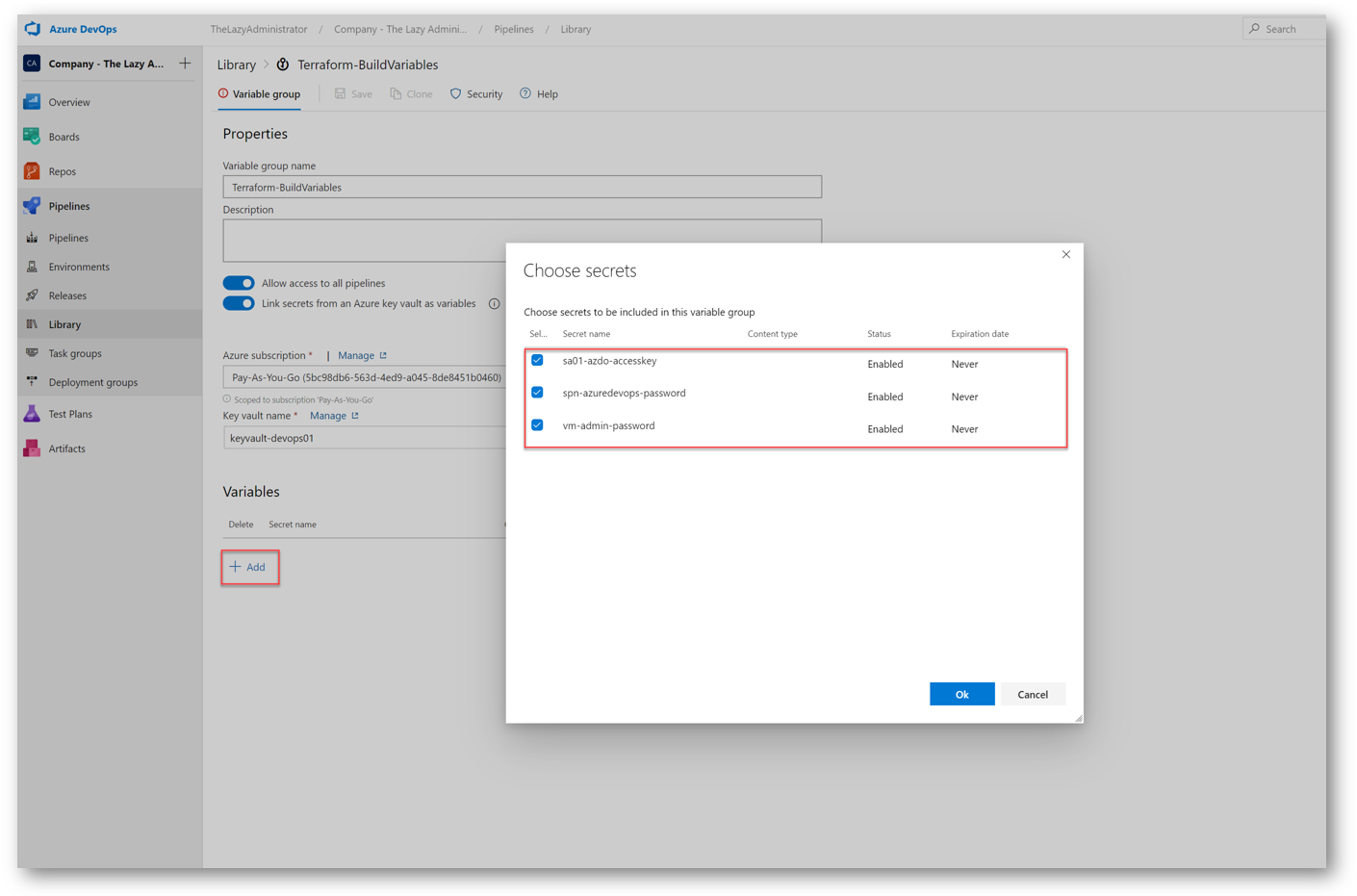

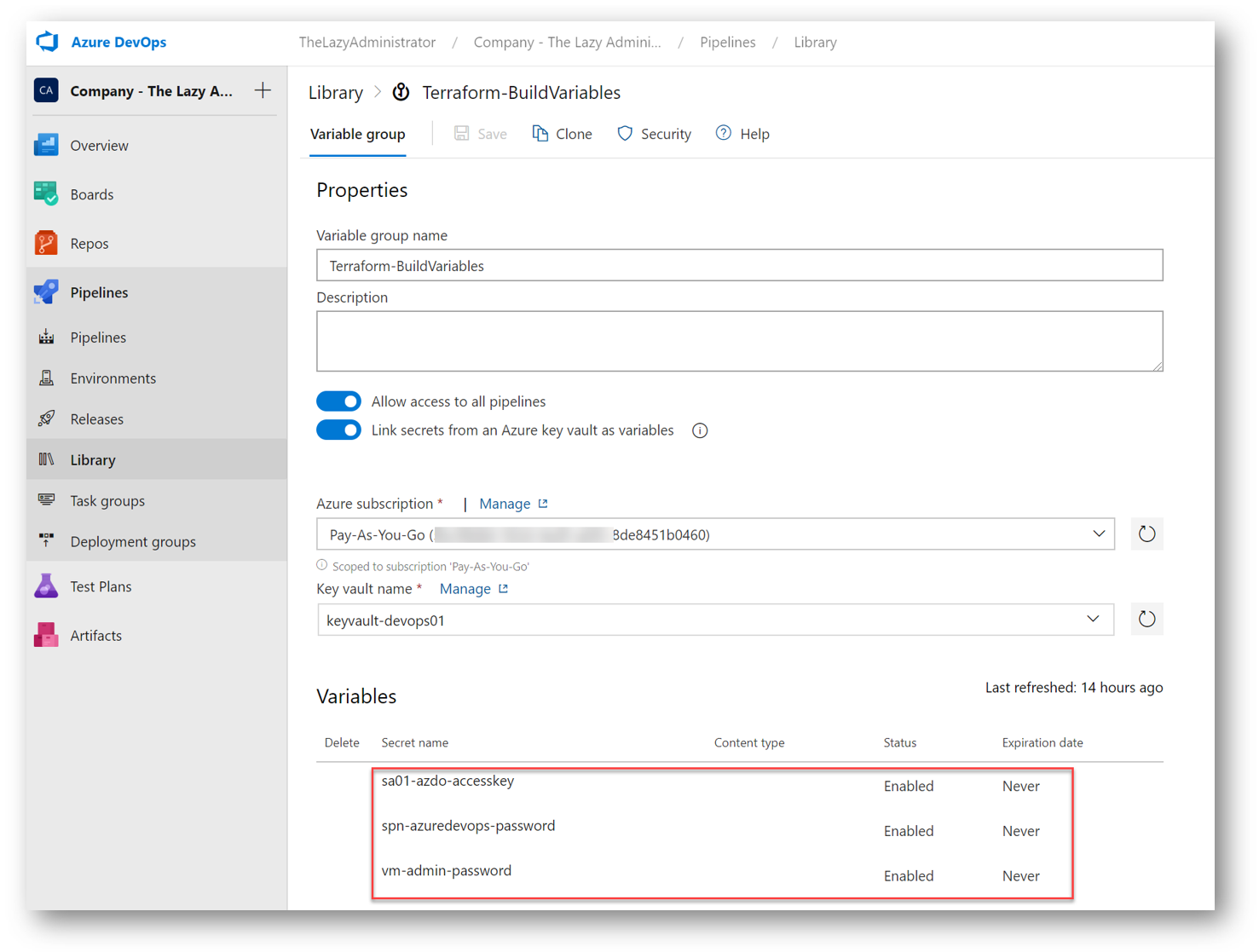

Next, we need to link our Key Vault secrets to our Variable Group. This will automatically create a variable for each secret we select: the variable’s name will be the Key Vault secret’s name, and its value will be the secret itself. In the Azure DevOps portal (dev.azure.com), navigate to your organization and then your Team Project. Go to Pipelines > Library and select your newly created variable group. Toggle “Link secrets from an Azure key vault as variables.”

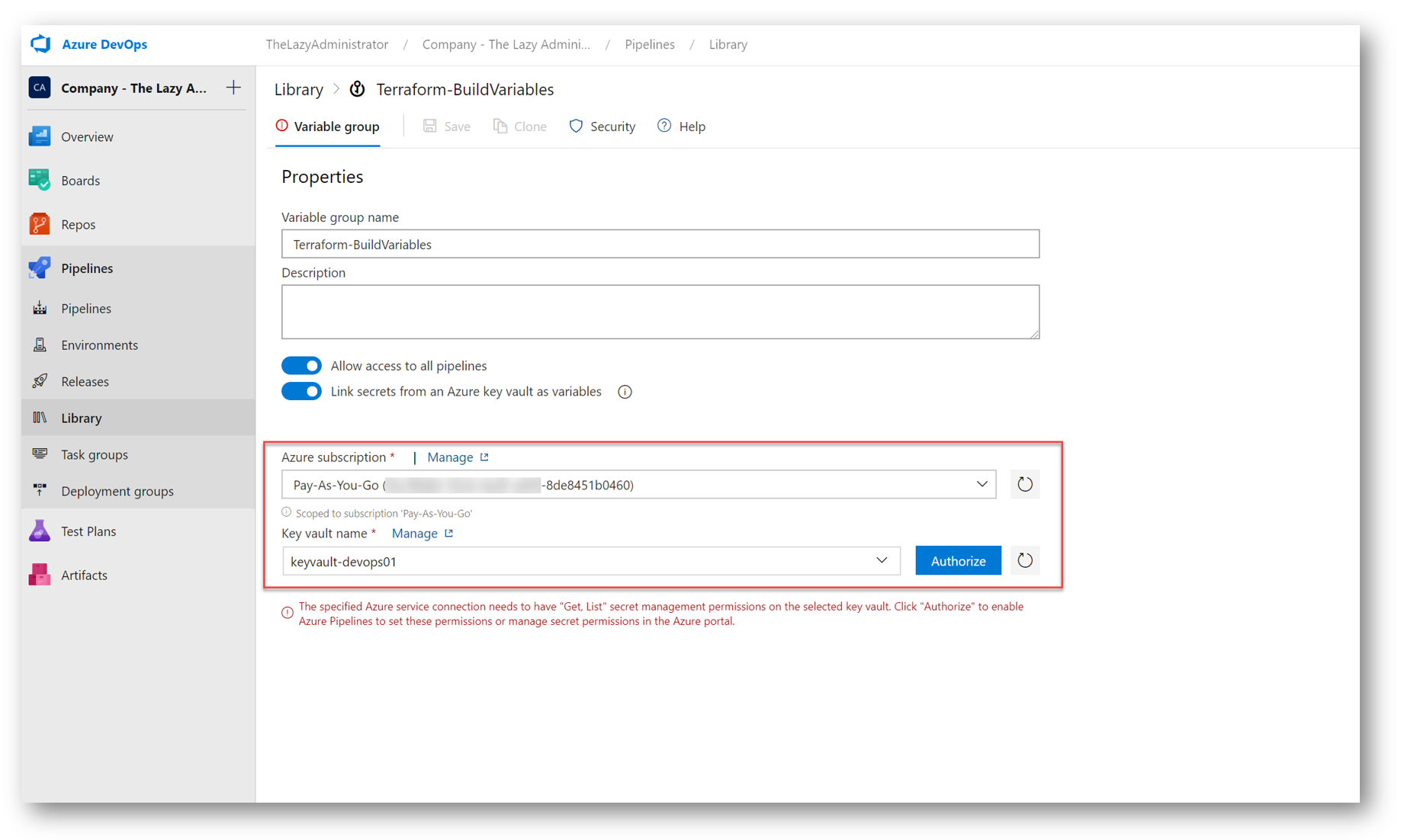

Next, select your subscription and the Key Vault you created earlier to store your secrets.

NOTE: You may have to press “authorize” to allow Azure DevOps access to your subscription and/or your Key Vault

Click the Add button and then select all of your secrets to import

Now I can see all of my Key Vault secrets linked to my variable group

Adding the Terraform Code to our Azure DevOps Repository

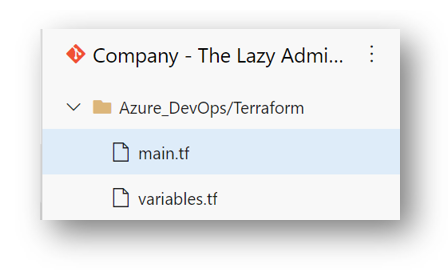

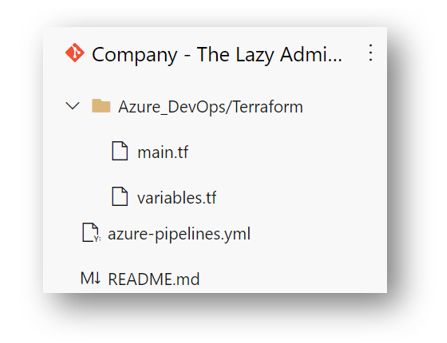

For development purposes, I cloned the repository I created above and opened it in VSCode. I would highly recommend you do the same. I also created two folders in my repository to keep things organized; this is not required. My repository has the following folder structure:

- (Root)
  - Azure_DevOps
    - Terraform (a child folder of Azure_DevOps)

In our Terraform folder, we will create two files:

- main.tf

- variables.tf

variables.tf declares all of our variables and their default values, and main.tf is our build-out / configuration Terraform file. Modify the variables file (and possibly main.tf) to best fit your needs.

Variables.tf

Note: You can download all of my source files and view the structure on my GitHub

```hcl
variable "client_secret" {
  description = "Client Secret"
}

variable "tenant_id" {
  description = "Tenant ID"
}

variable "subscription_id" {
  description = "Subscription ID"
}

variable "client_id" {
  description = "Client ID"
}

variable "environment" {
  description = "The name of the environment"
  default     = "dev"
}

variable "location" {
  description = "Azure location to use"
  default     = "north central us"
}

variable "virtual_network" {
  description = "Virtual network address space"
  default     = "10.0.0.0/16"
}

variable "internal_subnet" {
  description = "Address prefix of the internal subnet (must fall inside virtual_network)"
  default     = "10.0.2.0/24"
}

variable "office-WAN" {
  description = "The WAN IP of the office so I can RDP into my test environment"
  default     = "181.171.126.253"
}

variable "vm_name" {
  description = "The name given to the VM"
  default     = "vm-srv01"
}

variable "vm_size" {
  description = "The size of the VM"
  default     = "Standard_A2"
}

variable "storageimage_publisher" {
  description = "The OS image publisher"
  default     = "MicrosoftWindowsServer"
}

variable "storageimage_offer" {
  description = "The OS image offer"
  default     = "WindowsServer"
}

variable "storageimage_sku" {
  description = "The OS SKU"
  default     = "2019-datacenter"
}

variable "storageimage_version" {
  description = "The OS image version"
  default     = "latest"
}

variable "manageddisk_type" {
  description = "The managed disk type for the VM"
  default     = "Standard_LRS"
}

variable "admin_username" {
  description = "The username for our first local user for the VM"
  default     = "bwyatt"
}

variable "admin_password" {
  description = "The temporary password for our VM"
}
```
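The internal_subnet prefix must fall inside the virtual_network address space, or Azure will refuse to create the subnet. A quick Python check using the standard ipaddress module is sketched below. It also reflects the fact that Azure reserves five IP addresses in every subnet, so a /24 leaves 251 usable addresses; the helper names are hypothetical.

```python
import ipaddress

# Azure reserves five addresses in every subnet: the network address, three
# for Azure services (default gateway and DNS), and the broadcast address.
AZURE_RESERVED_PER_SUBNET = 5


def subnet_fits(vnet_cidr: str, subnet_cidr: str) -> bool:
    """True if the subnet prefix lies entirely inside the VNet address space."""
    vnet = ipaddress.ip_network(vnet_cidr)
    subnet = ipaddress.ip_network(subnet_cidr)
    return subnet.subnet_of(vnet)


def usable_azure_hosts(subnet_cidr: str) -> int:
    """Addresses left for NICs after Azure's reserved addresses."""
    return ipaddress.ip_network(subnet_cidr).num_addresses - AZURE_RESERVED_PER_SUBNET


if __name__ == "__main__":
    print(subnet_fits("10.0.0.0/16", "10.0.2.0/24"), usable_azure_hosts("10.0.2.0/24"))
```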

Main.tf

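IMPORTANT! If we did not include the terraform { backend "azurerm" {} } block at the top of the file, Terraform would fall back to its default local backend and write the state file to the build agent's working directory. The state would not be stored in the Storage Container and would be lost when the hosted agent is discarded after the job.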

```hcl
terraform {
  # Remote state: the storage account, container, key and access key are
  # supplied with -backend-config arguments at `terraform init` time.
  backend "azurerm" {}
}

# Configure the Azure Provider
provider "azurerm" {
  # While version is optional, we /strongly recommend/ using it to pin the version of the Provider being used
  version = "=2.5.0"

  subscription_id = var.subscription_id
  client_id       = var.client_id
  client_secret   = var.client_secret
  tenant_id       = var.tenant_id

  features {}
}

resource "azurerm_resource_group" "resourcegroup" {
  name     = "rg-${var.environment}-resources"
  location = var.location
}

resource "azurerm_virtual_network" "vnet" {
  name                = "network-${var.environment}"
  address_space       = [var.virtual_network]
  location            = azurerm_resource_group.resourcegroup.location
  resource_group_name = azurerm_resource_group.resourcegroup.name
}

resource "azurerm_subnet" "networksubnet" {
  name                 = "subnet-${var.environment}"
  resource_group_name  = azurerm_resource_group.resourcegroup.name
  virtual_network_name = azurerm_virtual_network.vnet.name
  address_prefix       = var.internal_subnet
}

resource "azurerm_network_security_group" "nsg" {
  name                = "nsg-${var.environment}"
  location            = azurerm_resource_group.resourcegroup.location
  resource_group_name = azurerm_resource_group.resourcegroup.name
}

resource "azurerm_network_security_rule" "nsgrule" {
  name      = "allow-rdp-from-main-office"
  priority  = 100
  direction = "Inbound"
  access    = "Allow"
  protocol  = "Tcp"
  # RDP clients connect from a random (ephemeral) source port, so match any
  # source port and filter on the destination port only
  source_port_range           = "*"
  destination_port_range      = "3389"
  source_address_prefix       = var.office-WAN
  destination_address_prefix  = "*"
  resource_group_name         = azurerm_resource_group.resourcegroup.name
  network_security_group_name = azurerm_network_security_group.nsg.name
}

resource "azurerm_network_security_rule" "denyrdpall" {
  name                        = "deny-rdp-all"
  priority                    = 200
  direction                   = "Inbound"
  access                      = "Deny"
  protocol                    = "Tcp"
  source_port_range           = "*"
  destination_port_range      = "3389"
  source_address_prefix       = "*"
  destination_address_prefix  = "*"
  resource_group_name         = azurerm_resource_group.resourcegroup.name
  network_security_group_name = azurerm_network_security_group.nsg.name
}

resource "azurerm_subnet_network_security_group_association" "sga" {
  subnet_id                 = azurerm_subnet.networksubnet.id
  network_security_group_id = azurerm_network_security_group.nsg.id
}

resource "azurerm_public_ip" "pip" {
  name                = "pip-${var.environment}"
  resource_group_name = azurerm_resource_group.resourcegroup.name
  location            = azurerm_resource_group.resourcegroup.location
  allocation_method   = "Static"
}

resource "azurerm_network_interface" "nic" {
  name                = "nic-${var.environment}"
  location            = azurerm_resource_group.resourcegroup.location
  resource_group_name = azurerm_resource_group.resourcegroup.name

  ip_configuration {
    name                          = "configuration"
    subnet_id                     = azurerm_subnet.networksubnet.id
    private_ip_address_allocation = "Dynamic"
    public_ip_address_id          = azurerm_public_ip.pip.id
  }
}

resource "azurerm_virtual_machine" "vm" {
  name                  = var.vm_name
  location              = azurerm_resource_group.resourcegroup.location
  resource_group_name   = azurerm_resource_group.resourcegroup.name
  network_interface_ids = [azurerm_network_interface.nic.id]
  vm_size               = var.vm_size

  # This means the OS Disk will be deleted when Terraform destroys the Virtual Machine
  # NOTE: This may not be optimal in all cases.
  delete_os_disk_on_termination = true

  storage_image_reference {
    publisher = var.storageimage_publisher
    offer     = var.storageimage_offer
    sku       = var.storageimage_sku
    version   = var.storageimage_version
  }

  storage_os_disk {
    name              = "disk-${var.vm_name}-os"
    caching           = "ReadWrite"
    create_option     = "FromImage"
    managed_disk_type = var.manageddisk_type
  }

  os_profile {
    computer_name  = var.vm_name
    admin_username = var.admin_username
    admin_password = var.admin_password
  }

  os_profile_windows_config {
    provision_vm_agent        = true
    enable_automatic_upgrades = true
  }
}
```
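Most resource names in main.tf are built from var.environment, so it can help to see what a given value produces. The Python sketch below simply mirrors the name templates from main.tf; the function is hypothetical and only for illustration.

```python
from typing import Dict


def rendered_names(environment: str = "dev", vm_name: str = "vm-srv01") -> Dict[str, str]:
    """Mirror the name templates used in main.tf for a given environment."""
    return {
        "resource_group": f"rg-{environment}-resources",
        "virtual_network": f"network-{environment}",
        "subnet": f"subnet-{environment}",
        "network_security_group": f"nsg-{environment}",
        "public_ip": f"pip-{environment}",
        "network_interface": f"nic-{environment}",
        "os_disk": f"disk-{vm_name}-os",
    }


if __name__ == "__main__":
    for kind, name in rendered_names().items():
        print(f"{kind:24} {name}")
```

All of these names are tracked in a single state file. Changing var.environment against that same state would rename the resources, and since an Azure resource group cannot be renamed in place, Terraform would plan to replace them rather than create a second set alongside them. Keeping a separate backend key (the state_file value in the pipeline) per environment avoids that.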

Back in my Azure DevOps repository, I can see my two files.

Install the Terraform Azure DevOps Extension

Next, we will need to install the Terraform extension by Microsoft DevLabs from the Visual Studio Marketplace.

Select: Get it for free

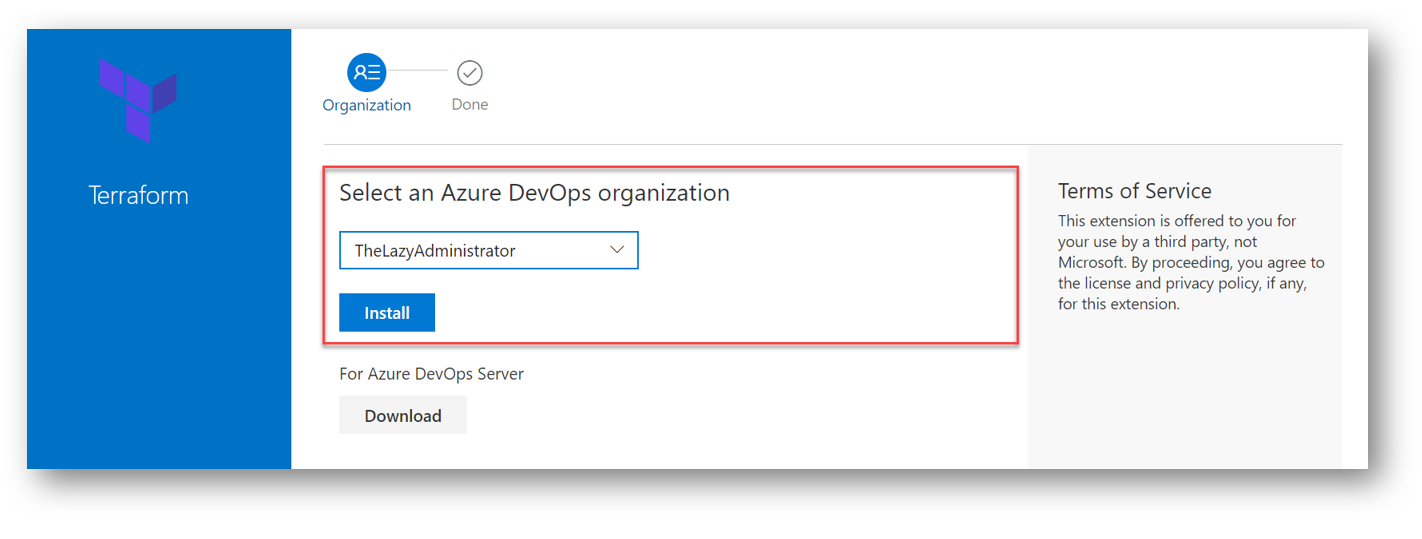

Select your Azure DevOps organization and then select Install

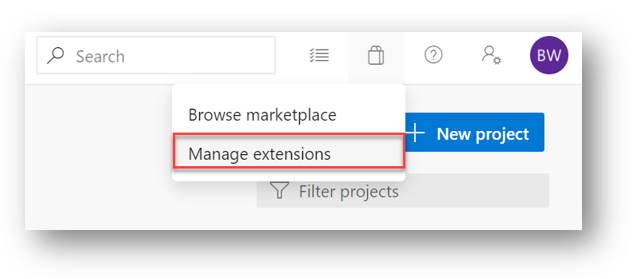

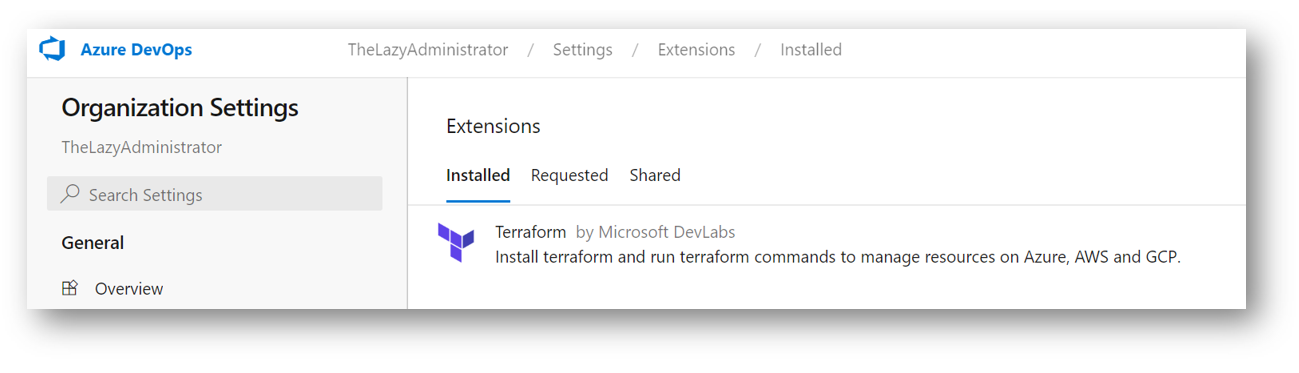

Back in Azure DevOps, if you click the shopping bag icon and select Manage extensions, you will see the Terraform extension

Create the Azure DevOps Pipeline

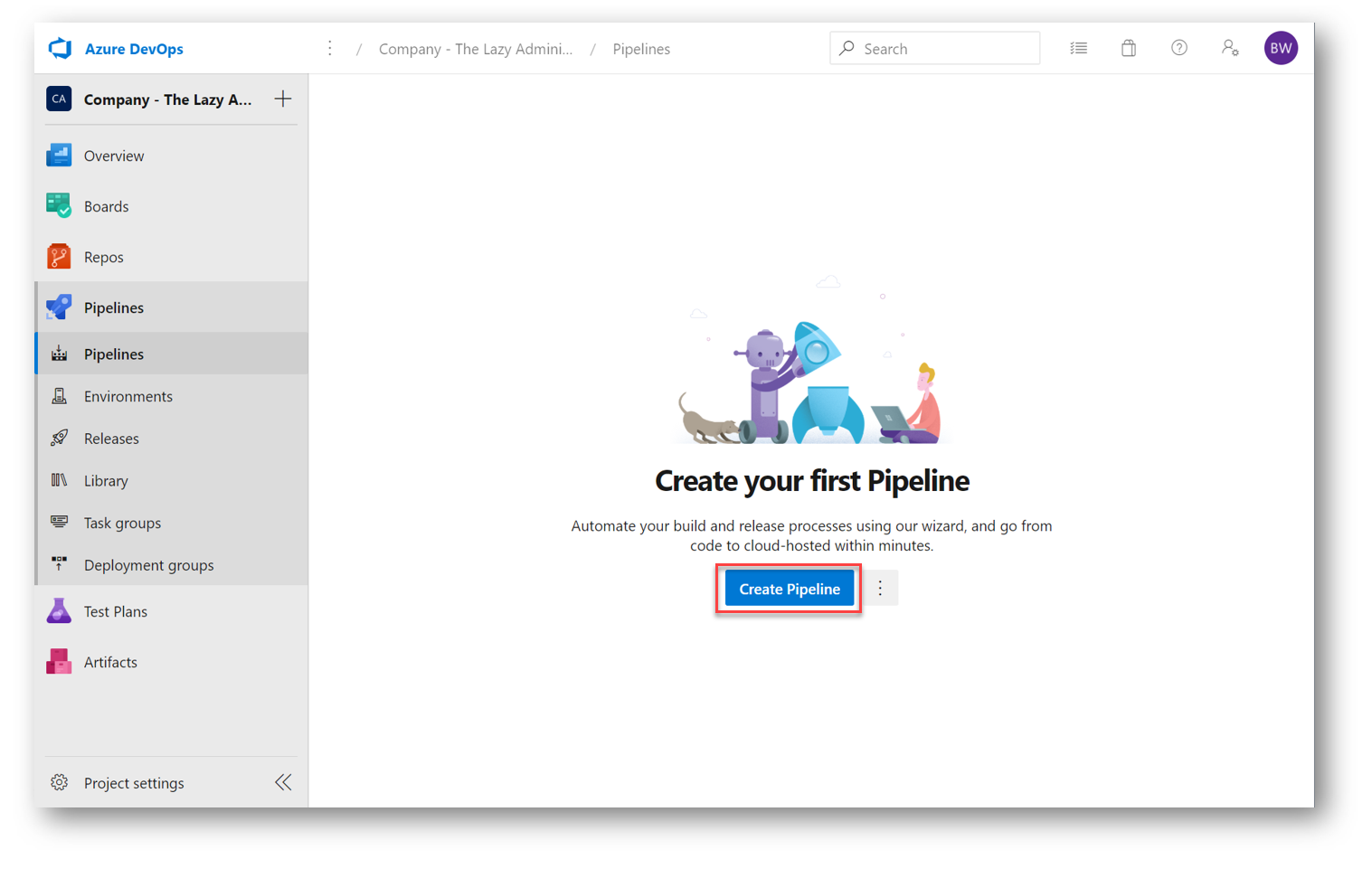

Now we are ready to create our Azure DevOps pipeline for our Terraform project. Proceed to dev.azure.com and enter your Team Project. In the left pane select Pipelines and then click Create Pipeline

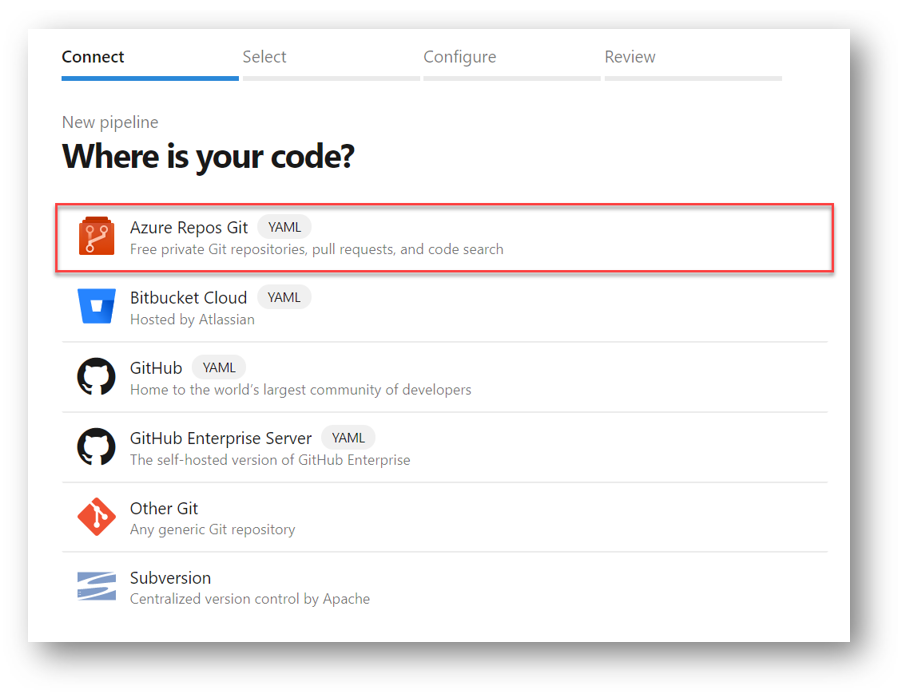

In the next pane, where it asks “Where is your code?” select Azure Repos Git

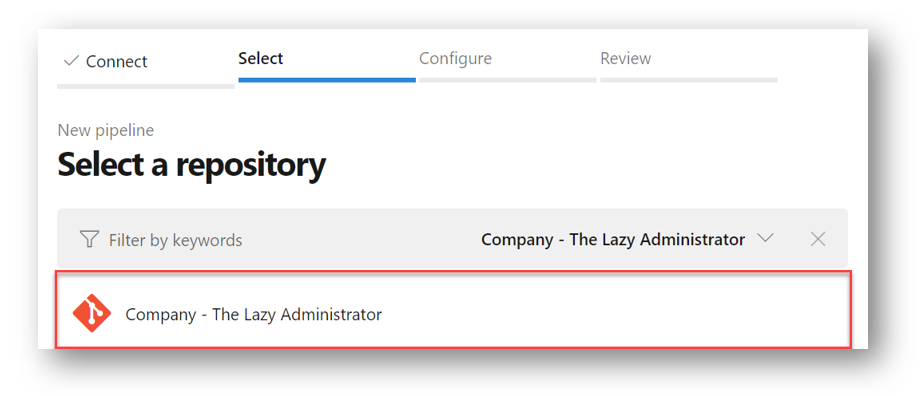

Under Select your Repository select the repo you created earlier where your Terraform files (main.tf and variables.tf) now live

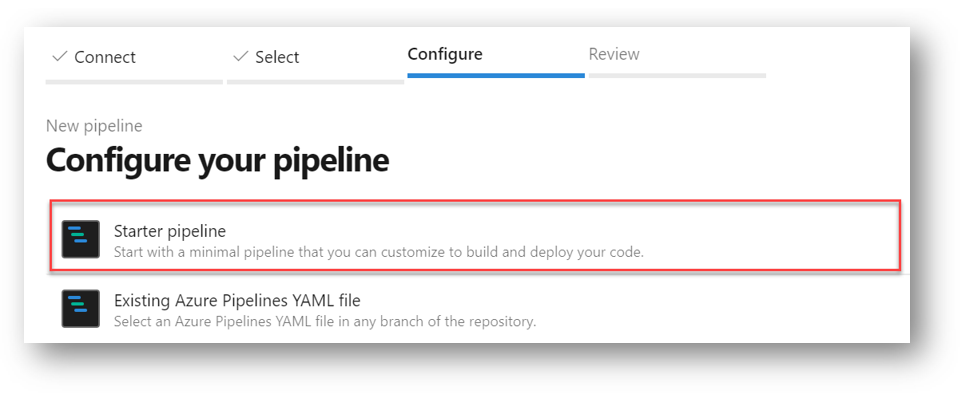

For Configure your Pipeline select Starter Pipeline

Erase the default text you see in the starter pipeline. Below is the YAML file with all of the code needed. You will need to enter your subscription ID (can be found under Subscriptions in the Azure Portal), Application ID (this is the application ID of your SPN), and Tenant ID (can be found in Azure Active Directory in the Azure Portal).

If you changed the name of the Resource Group, Storage Account, or Blob Storage Container, you will need to change their names in the variables section as well.

YAML Configuration File

- Trigger: To make the Pipeline run automatically, we need to define a trigger. The trigger below causes the DevOps Pipeline to run when a commit is pushed to the master branch of our repo.

- Paths: ‘By default, if you don’t specifically include or exclude files or directories in a CI build, the pipeline will run when a commit is done on any file.’ [2] Since my repository contains other files unrelated to this Terraform project, I only want the pipeline to run when my two Terraform files (variables.tf and main.tf) change.

- Pool: The Microsoft-hosted agent image (ubuntu-latest) that runs my code and deploys my infrastructure

- Group: The Variable Group containing my secrets and their corresponding values. These will automatically be imported in

- subscription_id: The subscription ID I am deploying my resources to

- application_id: The application ID of my service principal name

- tenant_id: The ID of my Azure tenant

- storage_accounts: The Storage Account that houses my Storage Container that contains my state file

- blob_storage: The Storage Container that will house my state file

- state_file: The name of my Terraform state file

- sa-resource_group: The Resource Group that my Storage Account is in

The steps are self-explanatory. First, I install Terraform on the agent VM specified in the pool. After the install, I display the version of Terraform I am working with, then log in to Azure with az login using my Service Principal’s credentials. Notice that some of the variables used are not defined in my YAML configuration. That is because they come from my Variable Group, and I reference them by name using the $(name) syntax.

Example: sa01-azdo-accesskey is the name of the variable in my Variable Group

Finally, I run terraform init, plan, and apply.
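The $(name) references are Azure Pipelines macro syntax: before a step runs, each $(name) is replaced with the value of the variable of that name, whether it is defined in the YAML file or comes from the linked Variable Group, and a reference with no matching variable is left as-is. The Python sketch below is a simplified model of that substitution for illustration only. It is not the agent's implementation, and it ignores details such as case-insensitive variable names and the masking of secret values in logs; the function and sample values are hypothetical.

```python
import re
from typing import Mapping

# Matches macro references such as $(tenant_id), $(sa01-azdo-accesskey)
# or $(System.DefaultWorkingDirectory).
_MACRO = re.compile(r"\$\(([A-Za-z0-9_.\-]+)\)")


def expand_macros(command: str, variables: Mapping[str, str]) -> str:
    """Replace each $(name) with its value; unknown names are left unchanged."""
    return _MACRO.sub(lambda m: variables.get(m.group(1), m.group(0)), command)


if __name__ == "__main__":
    variables = {
        "application_id": "<application-id>",    # defined in the YAML file
        "tenant_id": "<tenant-id>",              # defined in the YAML file
        "spn-azuredevops-password": "<secret>",  # from the Key Vault-linked Variable Group
    }
    print(expand_macros(
        "az login --service-principal -u $(application_id) "
        "-p $(spn-azuredevops-password) --tenant $(tenant_id)",
        variables,
    ))
```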

Note: You can download all of my source files (including this YAML file) and view the structure on my GitHub

```yaml
trigger:
  branches:
    include:
      - master
  paths:
    include:
      - /Azure_DevOps/Terraform/variables.tf
      - /Azure_DevOps/Terraform/main.tf

pool:
  vmImage: "ubuntu-latest"

variables:
  - group: Terraform-BuildVariables
  - name: subscription_id
    value: "5bc98db6-521c-1ed5-a023-8fe8r41b0460"
  - name: application_id
    value: "034j3d92-a6123-4c56-a03c-4348566f167c3"
  - name: tenant_id
    value: "63546b2c9-54e9-4f5e-9851-f00c4j323dc1f"
  - name: storage_accounts
    value: "sa01azuredevops"
  - name: blob_storage
    value: container01-azuredevops
  - name: state_file
    value: tf-statefile.state
  - name: sa-resource_group
    value: AzureDevOps

steps:
  - task: ms-devlabs.custom-terraform-tasks.custom-terraform-installer-task.TerraformInstaller@0
    displayName: 'Install Terraform'

  - script: terraform version
    displayName: Terraform Version

  - script: az login --service-principal -u $(application_id) -p $(spn-azuredevops-password) --tenant $(tenant_id)
    displayName: 'Log Into Azure'

  - script: terraform init -backend-config=resource_group_name=$(sa-resource_group) -backend-config="storage_account_name=$(storage_accounts)" -backend-config="container_name=$(blob_storage)" -backend-config="access_key=$(sa01-azdo-accesskey)" -backend-config="key=$(state_file)"
    displayName: "Terraform Init"
    workingDirectory: $(System.DefaultWorkingDirectory)/Azure_DevOps/Terraform

  - script: terraform plan -var="client_id=$(application_id)" -var="client_secret=$(spn-azuredevops-password)" -var="tenant_id=$(tenant_id)" -var="subscription_id=$(subscription_id)" -var="admin_password=$(vm-admin-password)" -out="out.plan"
    displayName: Terraform Plan
    workingDirectory: $(System.DefaultWorkingDirectory)/Azure_DevOps/Terraform

  - script: terraform apply out.plan
    displayName: 'Terraform Apply'
    workingDirectory: $(System.DefaultWorkingDirectory)/Azure_DevOps/Terraform
```
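The init and plan steps pass each setting as its own -backend-config or -var argument. If you ever need to drive Terraform from a script instead of these YAML steps (for example, on an agent where the Terraform extension is not allowed), building the arguments as a list avoids shell-quoting problems with secrets that contain special characters. A minimal Python sketch, with hypothetical helper names and sample values taken from the variables above:

```python
from typing import Dict, List


def terraform_init_args(backend_config: Dict[str, str]) -> List[str]:
    """One -backend-config=key=value argument per azurerm backend setting."""
    args = ["terraform", "init"]
    for key, value in backend_config.items():
        args.append(f"-backend-config={key}={value}")
    return args


def terraform_plan_args(tf_vars: Dict[str, str], plan_file: str = "out.plan") -> List[str]:
    """One -var=name=value argument per input variable, plus the saved plan file."""
    args = ["terraform", "plan"]
    for name, value in tf_vars.items():
        args.append(f"-var={name}={value}")
    args.append(f"-out={plan_file}")
    return args


if __name__ == "__main__":
    init_args = terraform_init_args({
        "resource_group_name": "AzureDevOps",
        "storage_account_name": "sa01azuredevops",
        "container_name": "container01-azuredevops",
        "key": "tf-statefile.state",
        # access_key would come from the Key Vault secret in a real run
    })
    print(init_args)
```

Passing such a list to subprocess.run(args, check=True, cwd=...) runs Terraform without a shell in between, so characters such as $ or ! in a secret reach Terraform unchanged.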

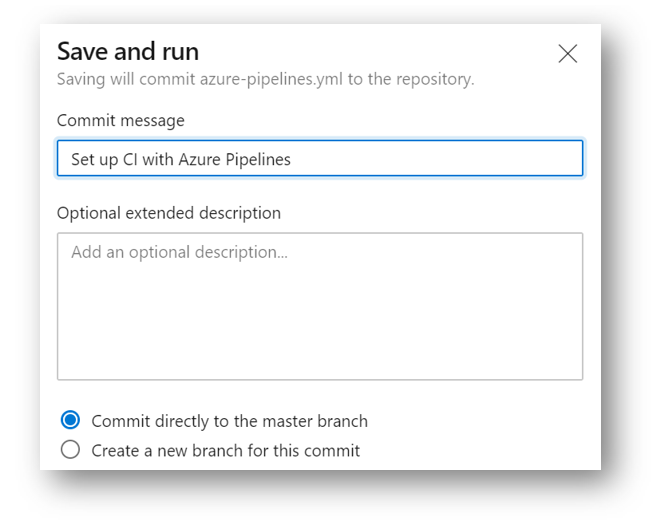

When you have finished with your YAML configuration file, select Save and Run

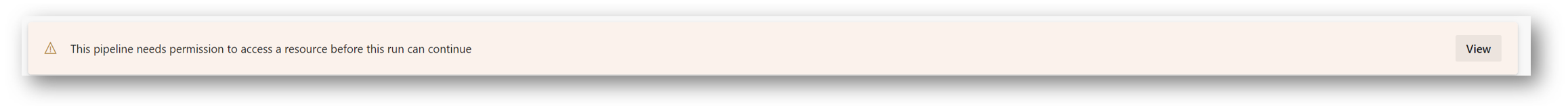

You may see the following warning; if not, just monitor the job. If you do see the warning below, as I did, click View and then click Allow.

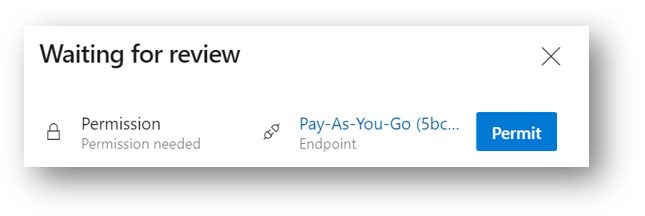

You will now see your new Pipeline and the current Job. We can see that mine already finished

If you click on Job, you can view each task of the job, the code output and the status of each part

If I look in the Azure Portal at my Storage Account, I can see my State file in my blob container

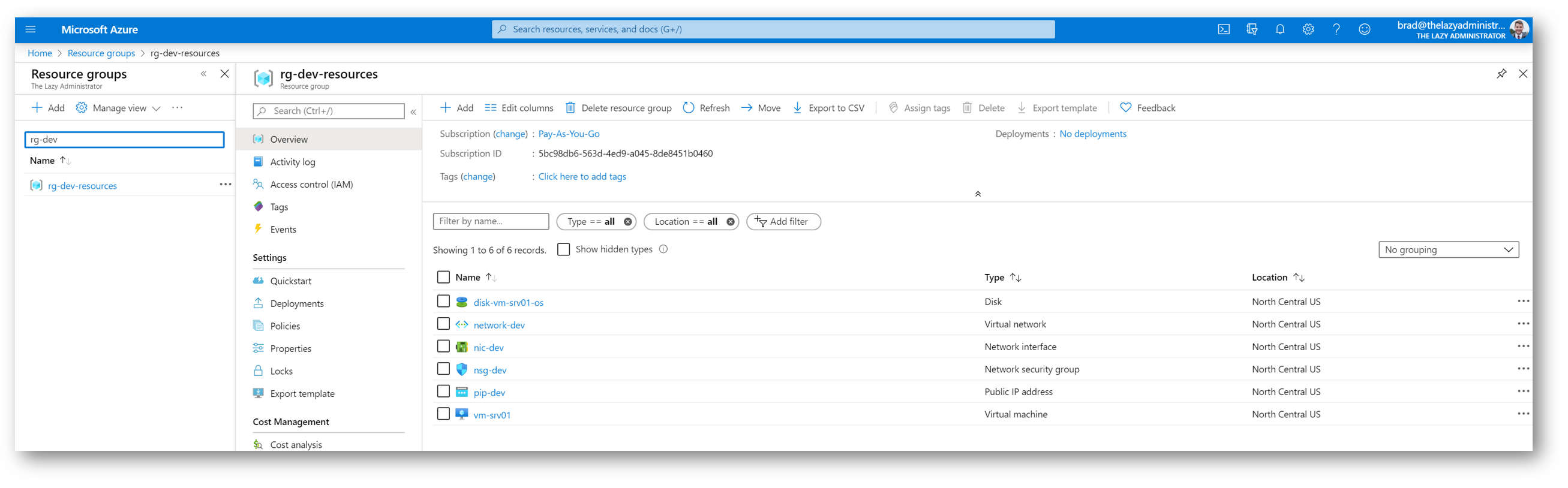

And if I go to the Resource Group that I had Terraform create, I can see it, and all of the other resources it created

Back in my Azure DevOps project, I can see the YAML pipeline file is now present as well

Continuous Integration/Continuous Deployment In Action

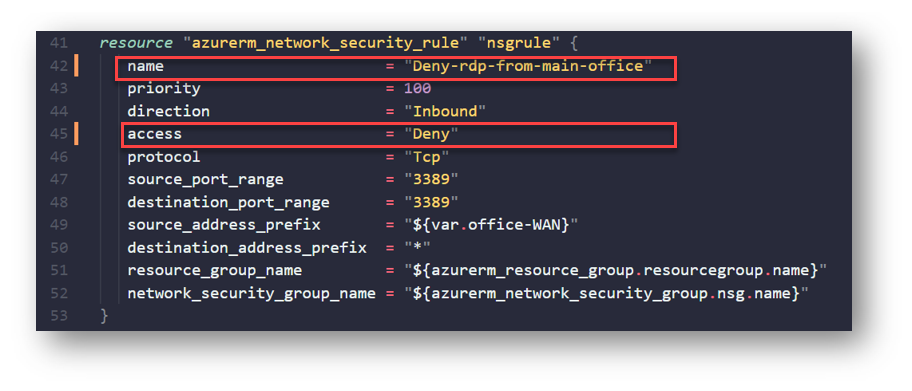

Now that my environment is deployed and managed via Terraform and Azure DevOps, I can take advantage of CI/CD simply by changing my configuration file; Azure DevOps and Terraform take care of the rest. In my example, I am going to change a Network Security Rule in my main.tf file. When I first deployed, I had two rules: one to allow RDP to my VM from my office WAN IP address, and another to deny RDP from everywhere else. Management has now told me that they do not want RDP to this server at all.
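Network Security Groups evaluate rules in priority order, lowest number first, and stop at the first rule that matches; that is why the office Allow rule (priority 100) wins over the deny-all rule (priority 200), and why flipping it to Deny blocks the office as well. The Python sketch below models that first-match behavior for the two RDP rules. It is a simplification: it ignores protocol and source ports, and it stands in for Azure's built-in default rules with a single final deny (the actual defaults also allow traffic from within the virtual network and from the Azure load balancer). The class and function names are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Rule:
    name: str
    priority: int          # lower number = evaluated first
    access: str            # "Allow" or "Deny"
    source_prefix: str     # "*" or an IP address / CIDR prefix
    destination_port: int


def evaluate(rules: List[Rule], source_ip: str, port: int) -> Tuple[str, str]:
    """Return (rule name, access) for the first matching rule by priority."""
    for rule in sorted(rules, key=lambda r: r.priority):
        source_matches = rule.source_prefix == "*" or (
            ipaddress.ip_address(source_ip) in ipaddress.ip_network(rule.source_prefix)
        )
        if source_matches and rule.destination_port == port:
            return rule.name, rule.access
    # Simplified stand-in for Azure's built-in DenyAllInBound default rule.
    return "DenyAllInBound", "Deny"


if __name__ == "__main__":
    office = "181.171.126.253"
    rules = [
        Rule("allow-rdp-from-main-office", 100, "Allow", office, 3389),
        Rule("deny-rdp-all", 200, "Deny", "*", 3389),
    ]
    print(evaluate(rules, office, 3389))         # office traffic hits priority 100
    print(evaluate(rules, "203.0.113.7", 3389))  # everyone else hits priority 200
```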

Since I have this repo synced to my laptop, I can just open VSCode and make a simple change to that area in the main.tf file. Below I changed it from Allow to Deny:

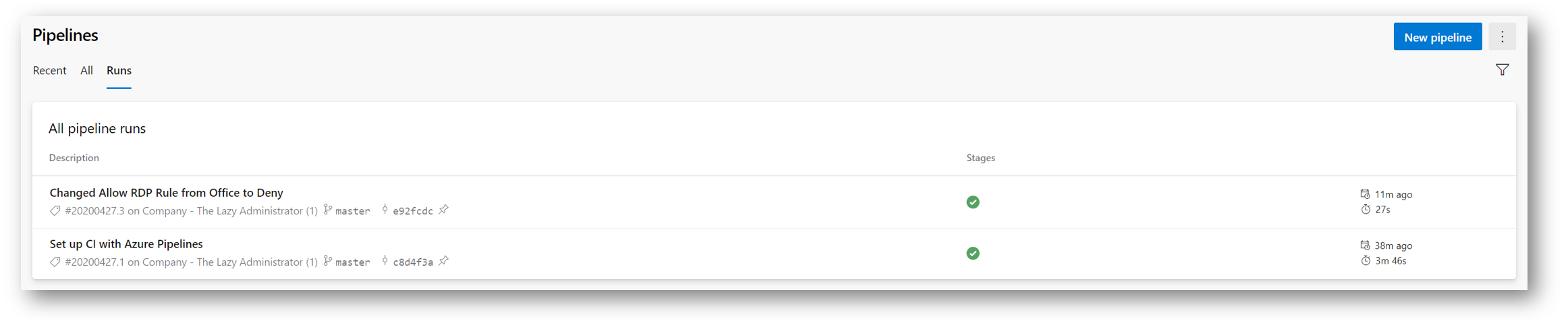

Then I commit the change to the master branch with the commit message “Changed Allow RDP Rule from Office to Deny” and sync it. Since my Pipeline is triggered by a commit to master that changes either variables.tf or main.tf, it runs automatically. Going to the Pipeline, I can see its most recent run:

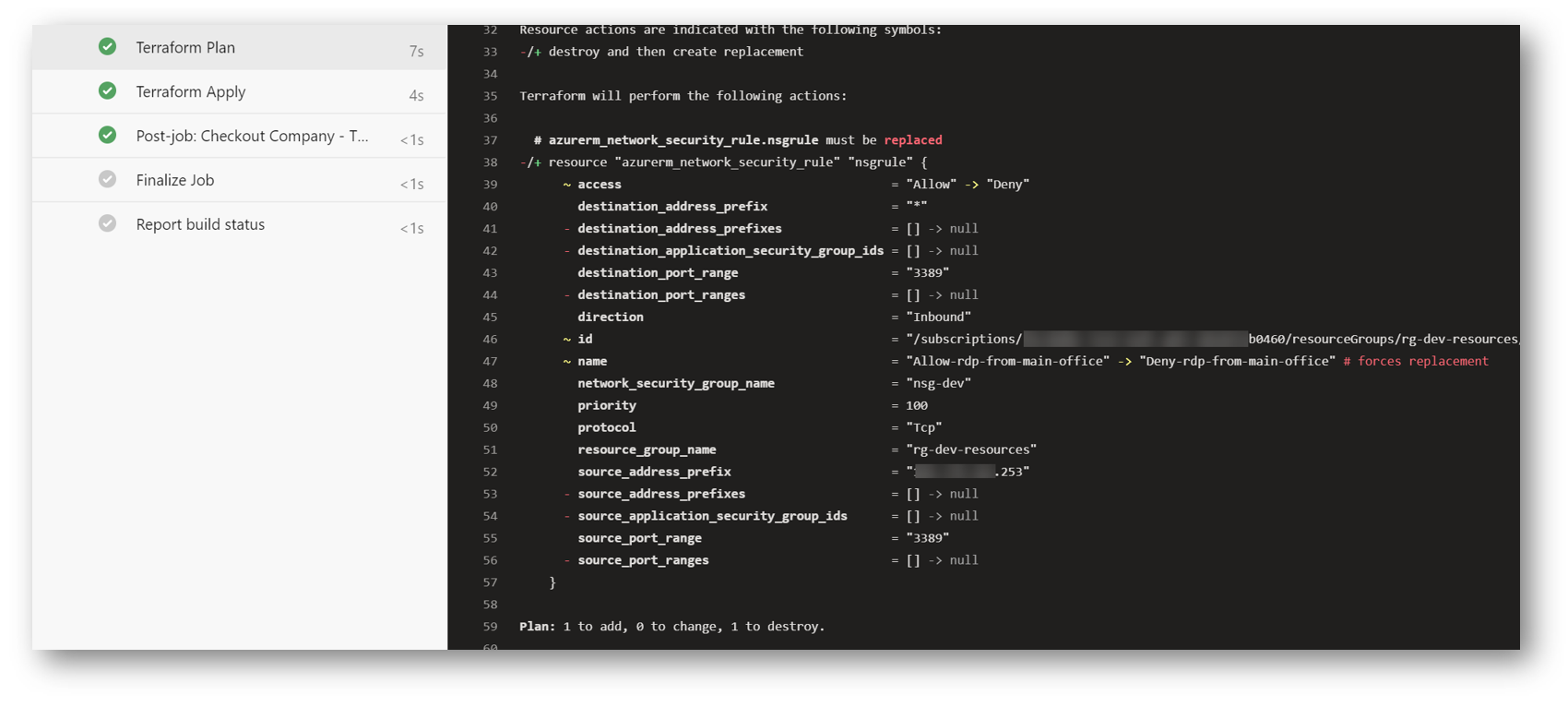

Digging into the run, I can see that during the Terraform Plan step, Terraform identifies the rule that needs to be removed and shows us the rule it will be putting in its place. It even shows us which parts of the rule changed from last time and what the new values are.

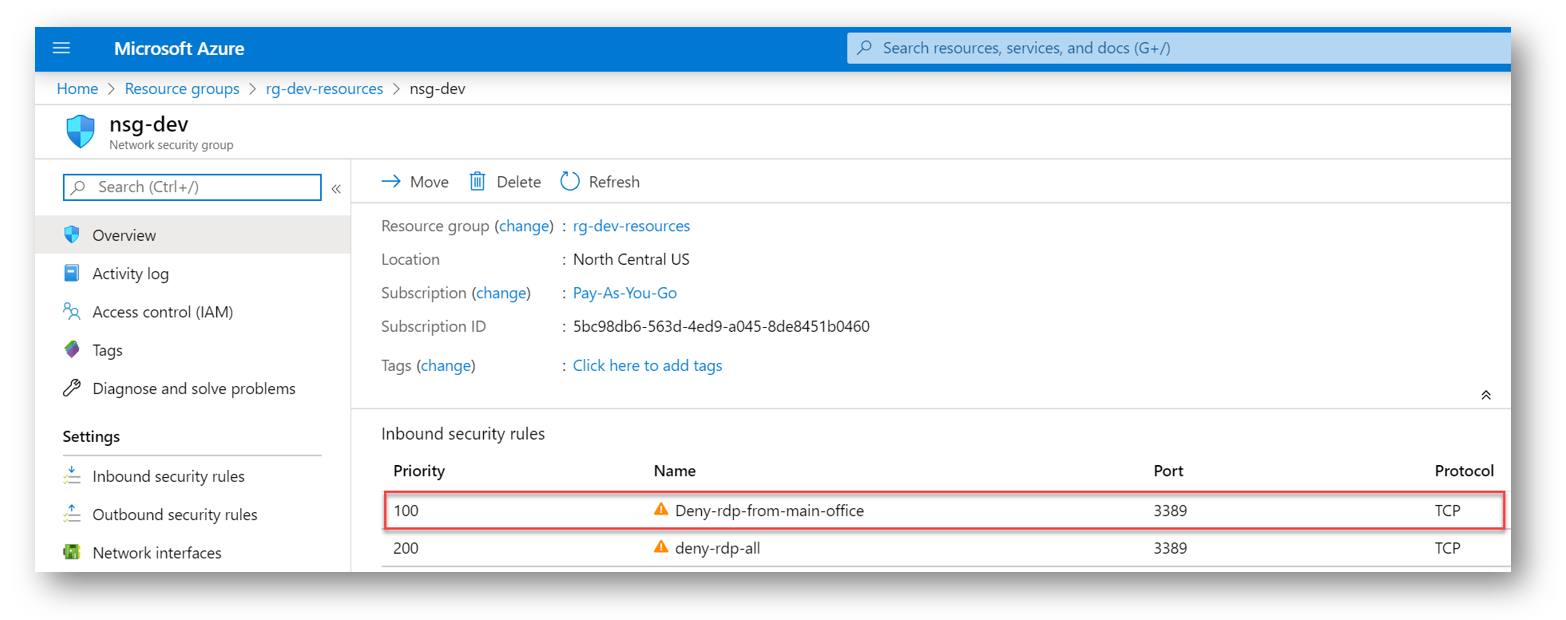

And in the Azure Portal, I can review the inbound network security rules and see my new rule

Summary

Now you can make any changes, additions, or deletions to this entire environment just by changing the code and syncing it to Azure DevOps. Because we configured the Pipeline to trigger on our updated files, it automatically does all the heavy lifting for us. Since all of this is done in Git, we also have a rich history of the changes made to our environment and who made them. We can compare yesterday’s working build to today’s broken build and see exactly what changed.

Sources

1: https://www.terraform.io/docs/state/index.html

2: https://adamtheautomator.com/azure-devops-pipeline-infrastructure/#the-trigger

My name is Bradley Wyatt; I am a 5x Microsoft Most Valuable Professional (MVP) in Microsoft Azure and Microsoft 365. I have given talks at many different conferences, user groups, and companies throughout the United States, ranging from PowerShell to DevOps Security best practices, and I am the 2022 North American Outstanding Contribution to the Microsoft Community winner.

8 thoughts on “Deploy and Manage Azure Infrastructure Using Terraform, Remote State, and Azure DevOps Pipelines (YAML)”

Thank you for the article, however I am not allowed to install the Terraform Azure Pipeline extension by Microsoft DevLabs into our company organization.

Please advise if it is still possible to perform the required Terraform tasks (download & install), then init/plan/apply etc. without the extension installed (on a vs2017-win2016 Azure Pipeline agent), in order to deploy the infrastructure into Azure (using an existing Service Principal account).

Thank you

Hello,

thanks for sharing your excellent guide.

During the guide you made changes to existing resources and I could follow that OK. However, I’m trying to gain a better understanding of how to add more resources. For example, if I wanted to add another resource group containing similar infrastructure, how would I do that? I’ve been working with Terraform modules, but I’m still having problems with creating new resources rather than changing existing resources.

I don’t necessarily need the answer to my question, but if you happen to know of any guides or blogs that would be helpful it would be appreciated if you could share them.

Thank you.

If you declare something in your Terraform configuration file, Terraform will see it is not there and create it for you. Then it will become managed via Terraform using the state file.

If I pass variable values to the script terraform wants to change existing resources, rather than create new ones.

Going into the config file to keep adding more code doesn’t seem very efficient.

Hi,

I simply wanted to say “thank you” for this detailed article. It really helped me a lot. Great work and well explained.